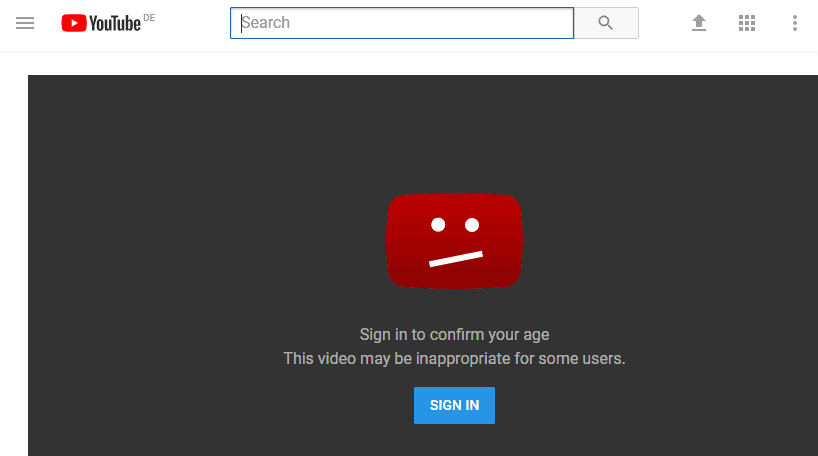

This Video May Be Inappropriate For Some Users

A growing number of videos now carry warnings such as "This Video May Be Inappropriate For Some Users," raising questions about content moderation, freedom of expression, and the potential impact on viewers, particularly younger audiences.

The phenomenon, observed across social media platforms including YouTube, TikTok, and X (formerly Twitter), calls for a closer look at these companies' policies and practices, as well as at the psychological effects of exposure to potentially disturbing content.

Content Warnings: A Necessary Evil?

Content warnings serve as a form of preemptive notification, alerting viewers to the presence of potentially offensive, graphic, or otherwise upsetting material. These warnings can cover a wide range of topics, including violence, sexual content, depictions of self-harm, and hate speech.

The implementation of content warnings is often driven by platform guidelines, legal requirements, and the pressure to maintain a safe and welcoming environment for users. However, the effectiveness and consistency of these warnings are frequently debated.

According to a report by the Pew Research Center, a significant percentage of social media users have encountered content that they found disturbing or offensive. The study also revealed varying levels of satisfaction with the platforms' responses to reported content.

The "Who, What, Where, When, Why, and How" of Inappropriate Content

Who is posting the content? The sources vary widely, ranging from individual users to established media outlets. What constitutes "inappropriate" content? The definition is subjective and often depends on the specific platform's policies, community standards, and the viewer's individual sensitivities.

Where are these videos being posted? The trend is observed across major social media platforms. When did this trend start gaining traction? While content warnings have existed for some time, their increased prevalence and scrutiny are relatively recent developments, likely spurred by heightened awareness of mental health and online safety.

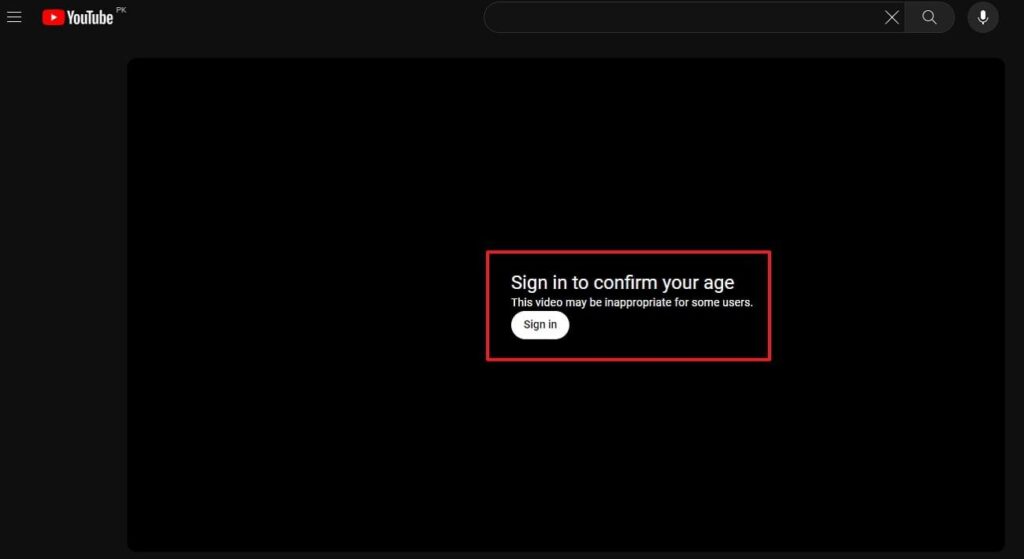

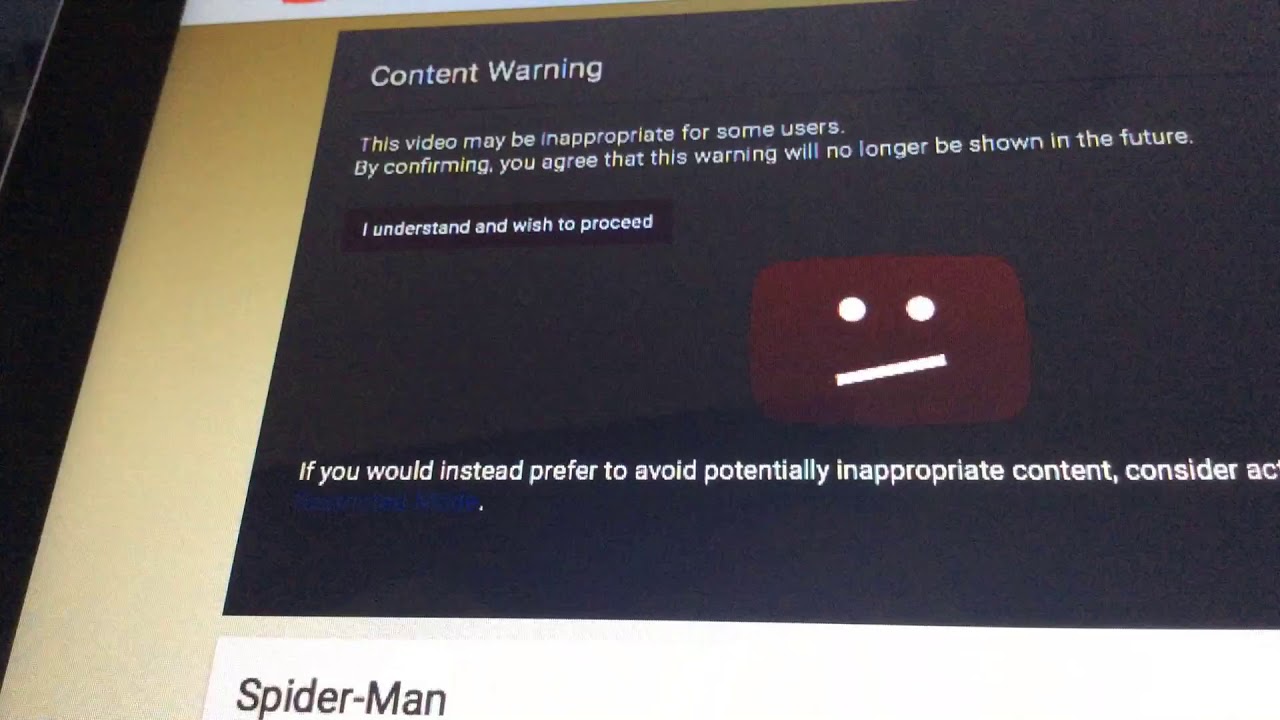

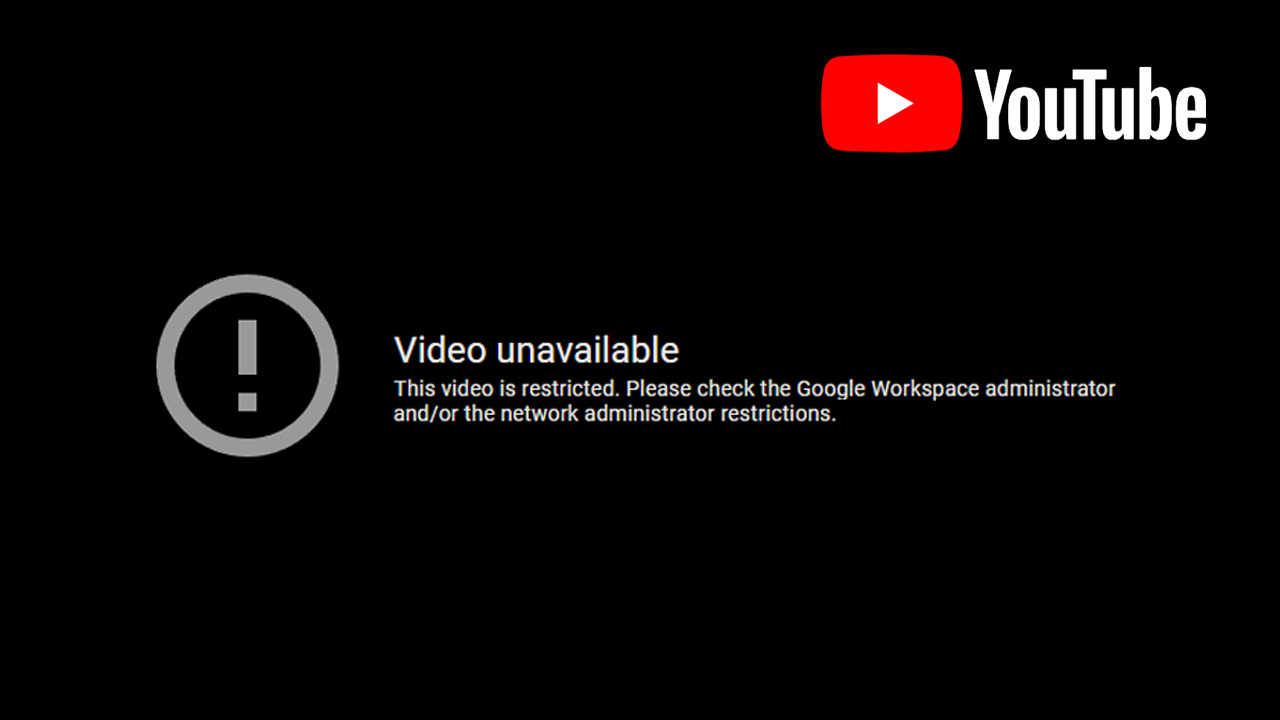

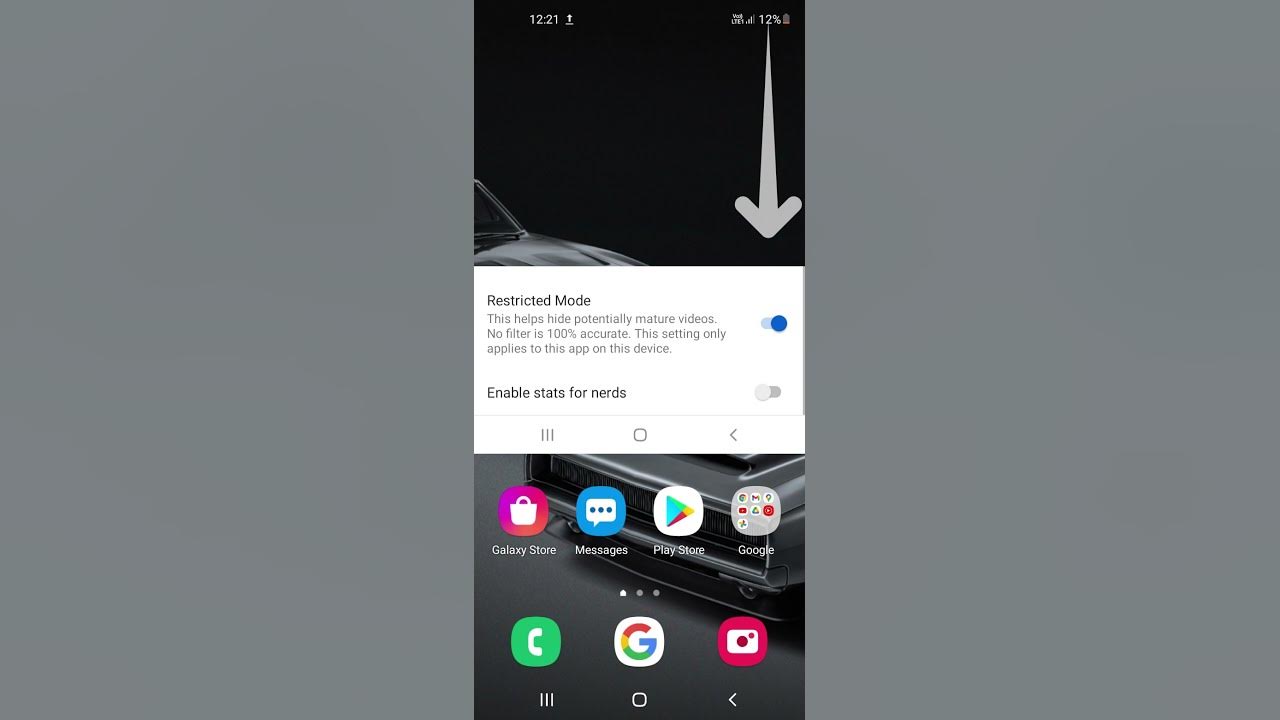

Why are these warnings used? Platforms use them to mitigate legal risks, protect users, and maintain a positive brand image. How are these warnings implemented? The methods vary, ranging from simple text overlays to age restrictions and click-through warnings requiring user acknowledgement.
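To make the range of implementations more concrete, here is a minimal sketch in Python of how a platform might map a video's sensitivity to one of the treatments mentioned above. Everything in it is a hypothetical illustration: the `Treatment` names, the 0 to 3 `sensitivity` score, and the `ADULT_AGE` threshold are assumptions, not the rules of any actual platform.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

ADULT_AGE = 18  # assumed threshold; real platforms and jurisdictions differ


class Treatment(Enum):
    NONE = "none"
    TEXT_OVERLAY = "text_overlay"      # simple warning text over the video
    CLICK_THROUGH = "click_through"    # viewer must acknowledge the warning
    AGE_RESTRICTED = "age_restricted"  # viewer must meet the age threshold


@dataclass
class Video:
    title: str
    sensitivity: int  # hypothetical score: 0 (benign) to 3 (most sensitive)


def choose_treatment(video: Video) -> Treatment:
    """Map the hypothetical sensitivity score to a warning treatment."""
    if video.sensitivity <= 0:
        return Treatment.NONE
    if video.sensitivity == 1:
        return Treatment.TEXT_OVERLAY
    if video.sensitivity == 2:
        return Treatment.CLICK_THROUGH
    return Treatment.AGE_RESTRICTED


def can_play(video: Video, viewer_age: Optional[int], acknowledged: bool) -> bool:
    """Decide whether playback may start for this viewer."""
    treatment = choose_treatment(video)
    if treatment is Treatment.AGE_RESTRICTED:
        # An unknown age is treated as not meeting the threshold.
        return viewer_age is not None and viewer_age >= ADULT_AGE
    if treatment is Treatment.CLICK_THROUGH:
        return acknowledged
    # No warning, or a text overlay that does not block playback.
    return True
```

In this sketch a text overlay informs without blocking, a click-through blocks until the viewer acknowledges it, and an age restriction blocks regardless of acknowledgement. Real systems typically combine these signals in more nuanced ways.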

The Impact on Viewers

Exposure to potentially disturbing content can have a range of psychological effects, especially on vulnerable individuals. These effects can include anxiety, fear, desensitization, and, in some cases, even post-traumatic stress.

Dr. Emily Carter, a clinical psychologist specializing in the impact of social media, notes that "repeated exposure to violent or disturbing content can normalize such behavior, leading to a decrease in empathy and an increase in aggressive tendencies."

The debate extends to whether content warnings actually deter viewers or, conversely, pique their curiosity, leading them to seek out the warned material. This phenomenon, known as the "forbidden fruit effect," suggests that warnings can sometimes backfire.

Freedom of Expression vs. User Safety

The use of content warnings raises complex questions about the balance between freedom of expression and user safety. Advocates for free speech argue that overzealous content moderation can stifle creativity and limit access to important information.

Conversely, proponents of stricter content controls emphasize the responsibility of platforms to protect their users from harmful content, especially children and adolescents. Finding a middle ground that respects both principles remains a significant challenge.

Some critics argue that content warnings are often used as a substitute for more substantive content moderation efforts. By simply labeling a video as "inappropriate," platforms may be shirking their responsibility to actively remove or downrank harmful content.

The Role of Algorithms and AI

Algorithms play a crucial role in determining which videos users see and how content warnings are applied. These algorithms are designed to personalize the user experience, but they can also inadvertently expose users to content that they find disturbing.

The use of artificial intelligence (AI) to detect and flag potentially inappropriate content is becoming increasingly common. However, AI-powered systems are not foolproof: they produce both false positives (incorrectly flagging benign content) and false negatives (failing to flag harmful content).
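The two error types can be made concrete with a short calculation. The Python sketch below compares a flagging system's decisions against human-reviewed labels and reports the false positive and false negative rates. The function names and the sample data are invented for illustration and do not come from any real moderation system.

```python
def confusion_counts(flagged, harmful):
    """Count outcomes given parallel lists of booleans.

    flagged[i] is True if the system flagged item i;
    harmful[i] is True if reviewers judged item i harmful.
    """
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for was_flagged, is_harmful in zip(flagged, harmful):
        if was_flagged and is_harmful:
            counts["tp"] += 1  # harmful content correctly flagged
        elif was_flagged and not is_harmful:
            counts["fp"] += 1  # benign content incorrectly flagged
        elif not was_flagged and is_harmful:
            counts["fn"] += 1  # harmful content missed
        else:
            counts["tn"] += 1  # benign content correctly left alone
    return counts


def error_rates(counts):
    """Return (false_positive_rate, false_negative_rate)."""
    benign = counts["fp"] + counts["tn"]
    harmful = counts["fn"] + counts["tp"]
    fp_rate = counts["fp"] / benign if benign else 0.0
    fn_rate = counts["fn"] / harmful if harmful else 0.0
    return fp_rate, fn_rate


# Invented sample: six videos, system decisions vs. reviewer labels.
system_flags = [True, True, False, False, True, False]
reviewer_labels = [True, False, True, False, True, False]

counts = confusion_counts(system_flags, reviewer_labels)
fp_rate, fn_rate = error_rates(counts)
print(counts, fp_rate, fn_rate)
```

Lowering one rate usually raises the other: a system tuned to flag more aggressively misses less harmful content but mislabels more benign videos, which is one reason the trade-off is debated.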

The transparency and accountability of these algorithms are also subjects of ongoing debate. Users often have limited visibility into how these systems work and how decisions are made about content moderation.

Moving Forward

Addressing the challenges posed by videos labeled "This Video May Be Inappropriate For Some Users" requires a multifaceted approach: strengthening platform policies, improving content moderation practices, and promoting media literacy among users.

Collaboration between social media platforms, policymakers, researchers, and mental health professionals is essential to develop effective strategies for mitigating the risks associated with exposure to potentially disturbing content. Open dialogue and transparency are key to finding solutions that protect both freedom of expression and user well-being.

Ultimately, the goal is to create a digital environment where users can engage with content responsibly and safely, while also preserving the principles of free speech and access to information.