What Is the Average IQ for Adults?

The pursuit of understanding human intelligence has captivated scientists and the public alike for over a century. At the heart of this quest lies the Intelligence Quotient, or IQ, a numerical representation of an individual's cognitive abilities relative to their peers. But what does it really mean to be "average," and how is that average defined in the context of IQ scores?

This article examines adult IQ: how it is measured and standardized, the factors that influence it, and the ongoing debates about its interpretation and significance. It covers the established norms, recent shifts in intelligence research, and the cautions needed when treating IQ scores as a definitive measure of human potential.

Defining the Average IQ

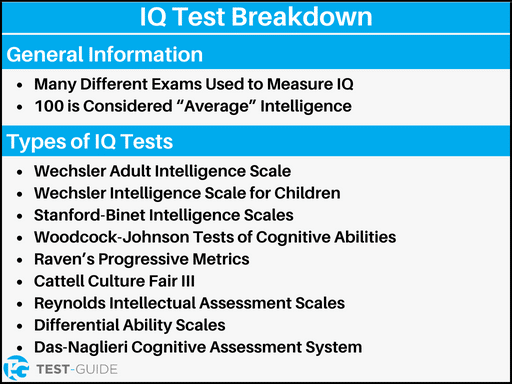

The concept of an "average IQ" is intrinsically linked to the way these tests are designed and standardized. Modern IQ tests, such as the Wechsler Adult Intelligence Scale (WAIS) and the Stanford-Binet, are normed against a representative sample of the population.

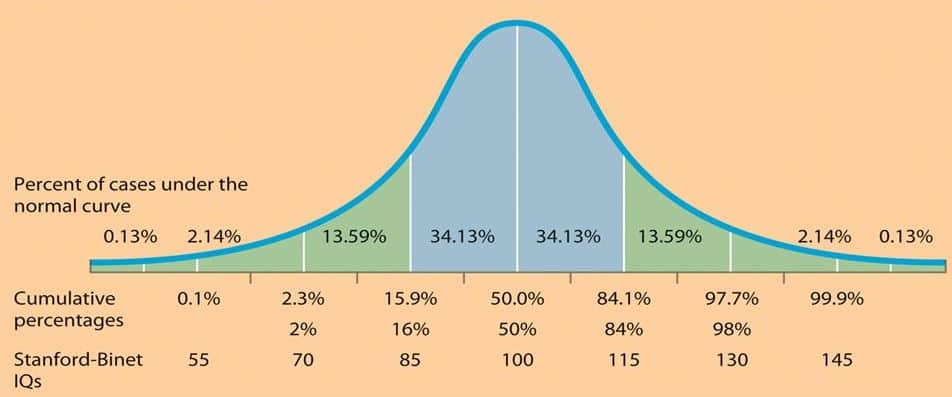

This process involves administering the test to a large, diverse group and adjusting the scoring so that the mean (average) score is set at 100, with a standard deviation of 15.
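
The rescaling step described above can be sketched in a few lines of Python. This is a simplified illustration, not how any published test is actually scored: real tests such as the WAIS rely on norm tables built from much larger samples, and the function name `standardize_to_iq` and the sample raw scores below are hypothetical.

```python
from statistics import mean, pstdev


def standardize_to_iq(raw_score, norm_scores, target_mean=100.0, target_sd=15.0):
    """Map a raw test score onto the IQ scale using a norming sample.

    Simplified linear standardization: express the raw score as a z-score
    relative to the norming sample, then rescale to mean 100 and SD 15.
    """
    sample_mean = mean(norm_scores)
    sample_sd = pstdev(norm_scores)
    if sample_sd == 0:
        raise ValueError("norming sample has no variability")
    z = (raw_score - sample_mean) / sample_sd
    return target_mean + target_sd * z


# Hypothetical raw scores from a (far too small) norming sample.
norm_sample = [38, 42, 45, 47, 50, 50, 53, 55, 58, 62]

# A raw score equal to the sample mean maps to an IQ of exactly 100.
print(standardize_to_iq(50, norm_sample))
```

Whatever the raw scores look like, the person who performs exactly at the sample mean lands on 100, which is why the average is 100 by construction rather than by discovery.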

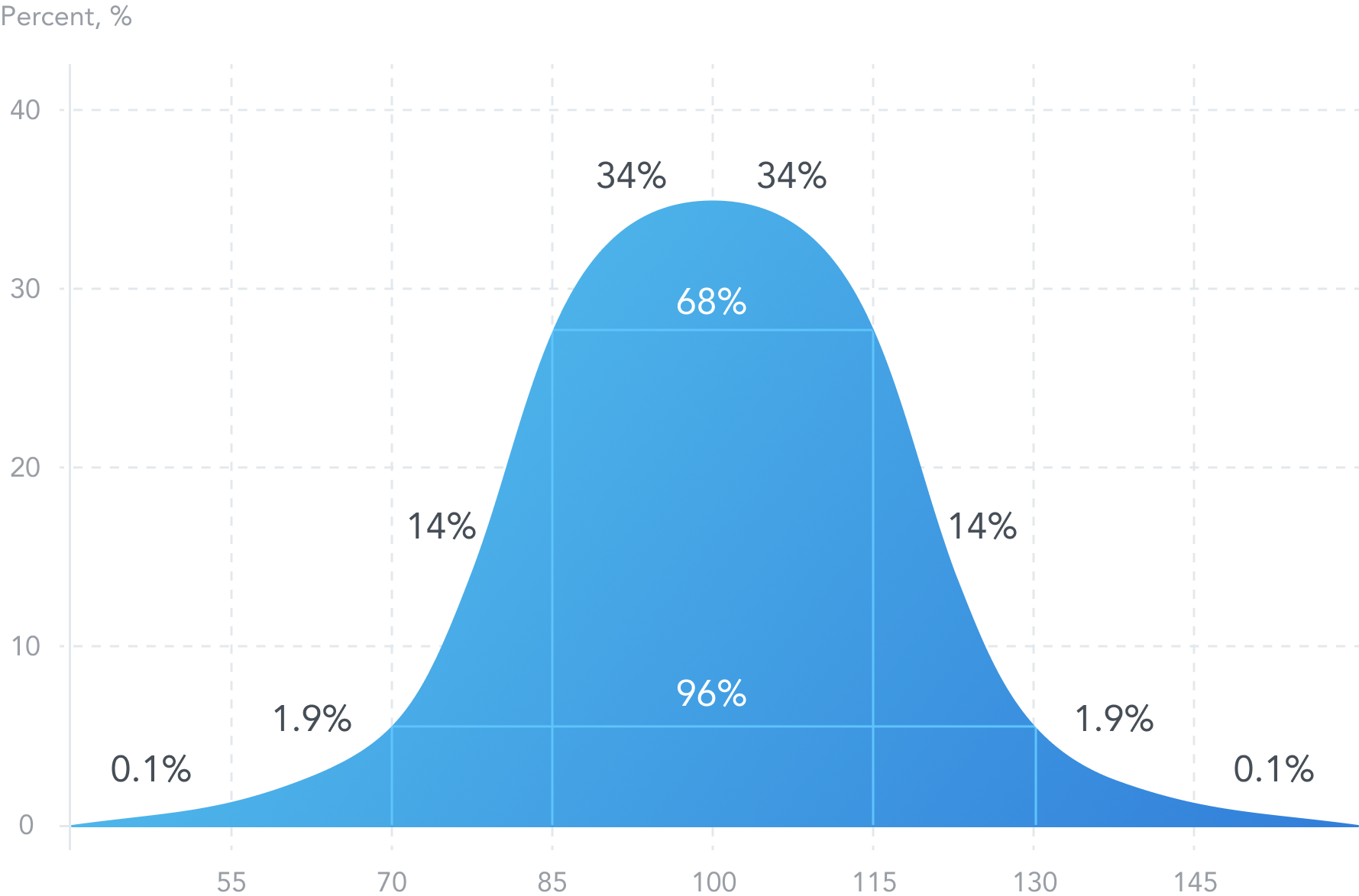

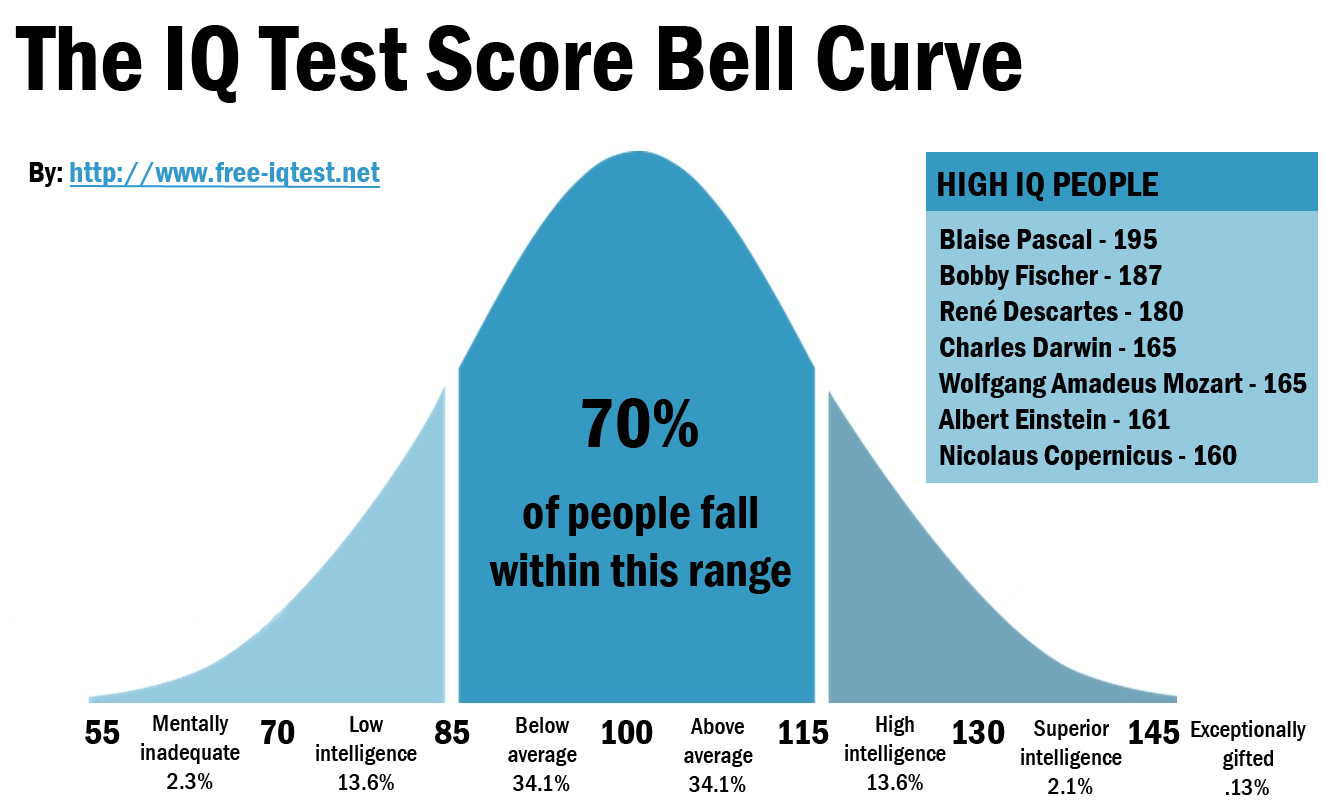

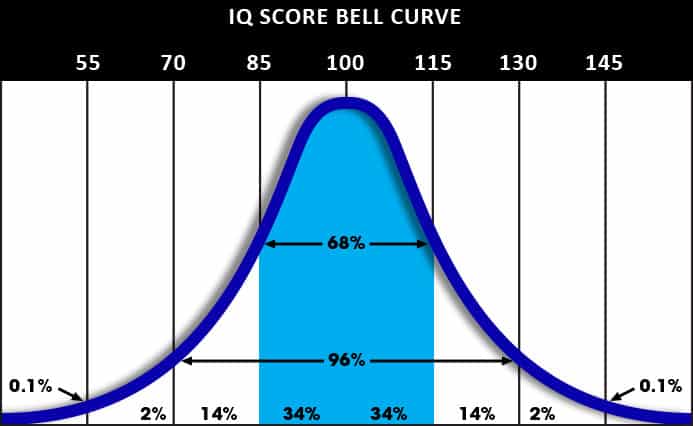

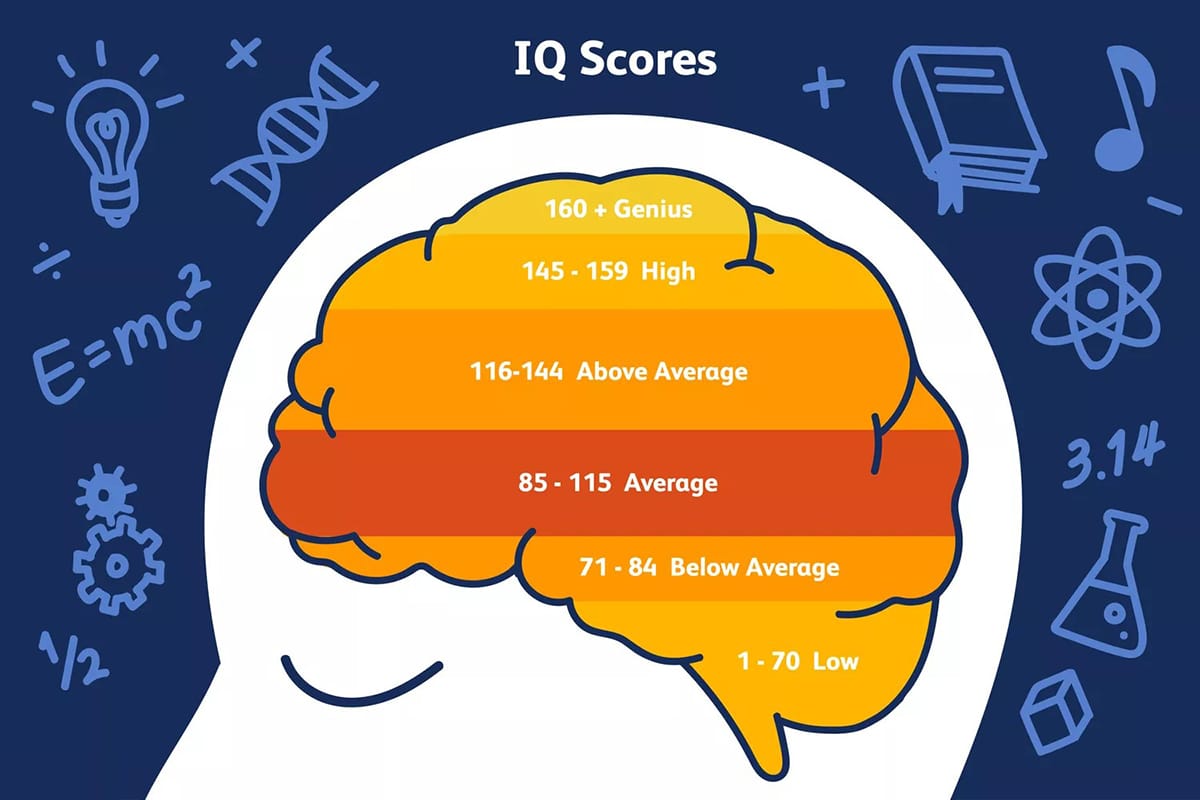

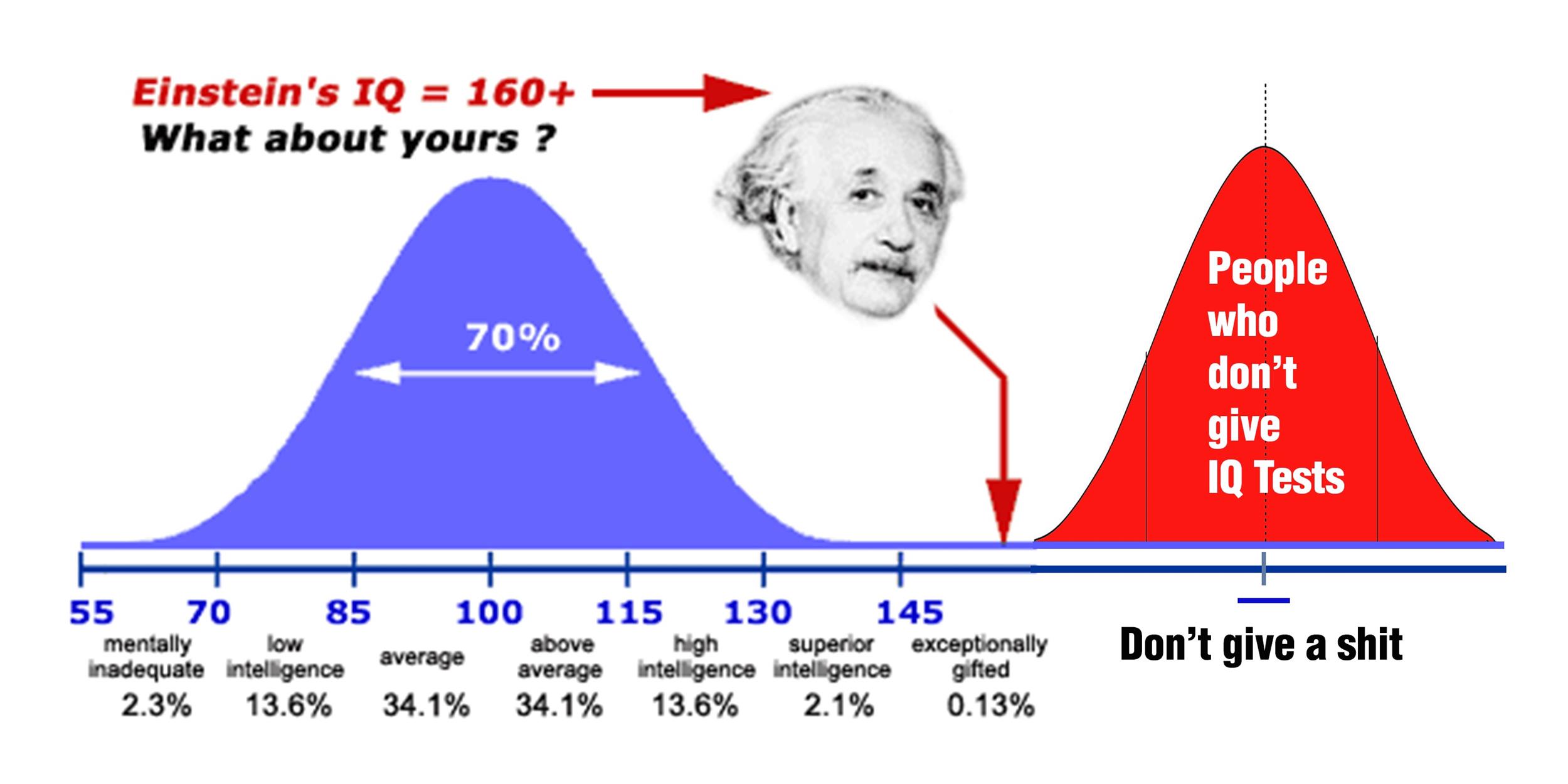

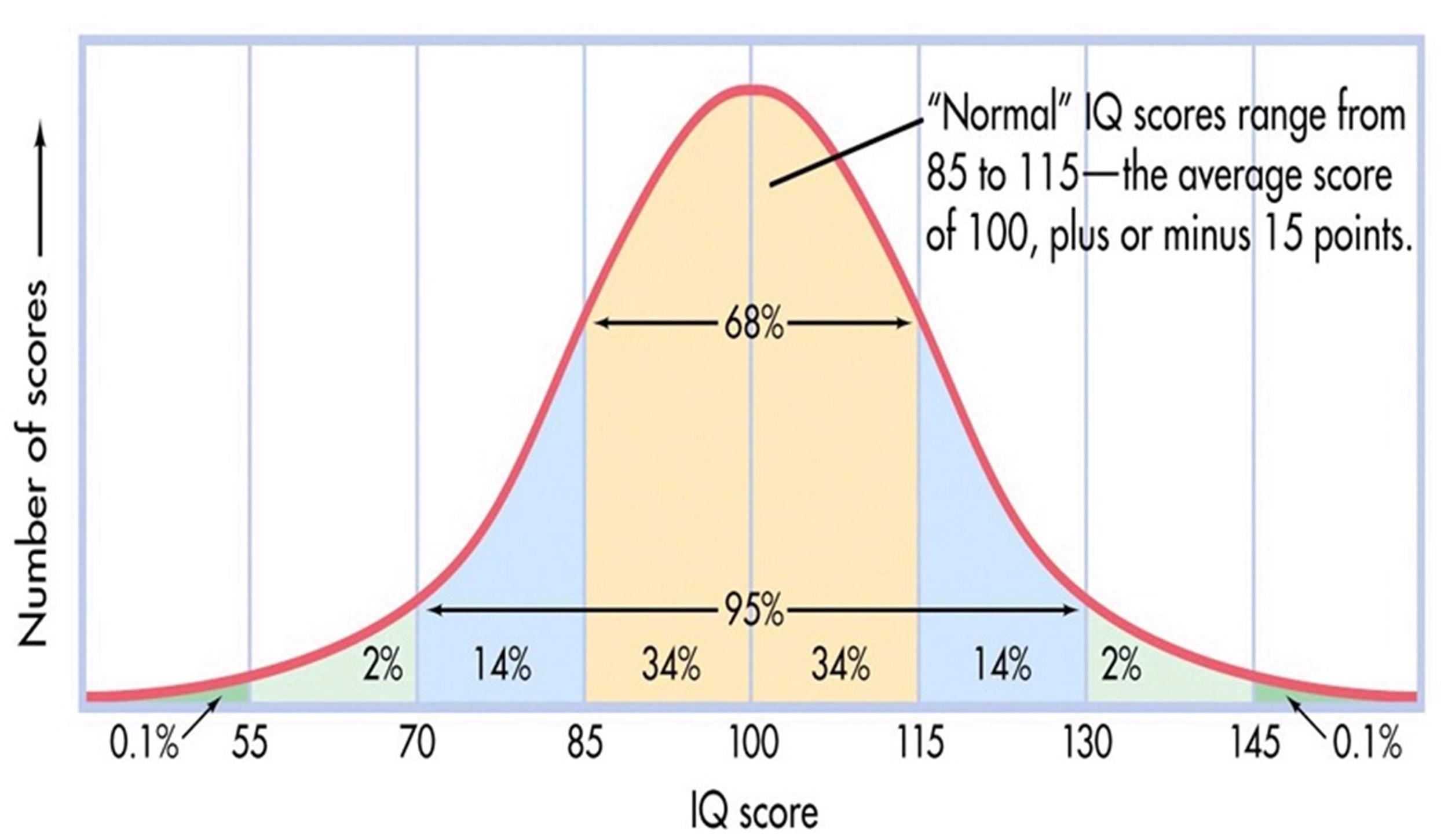

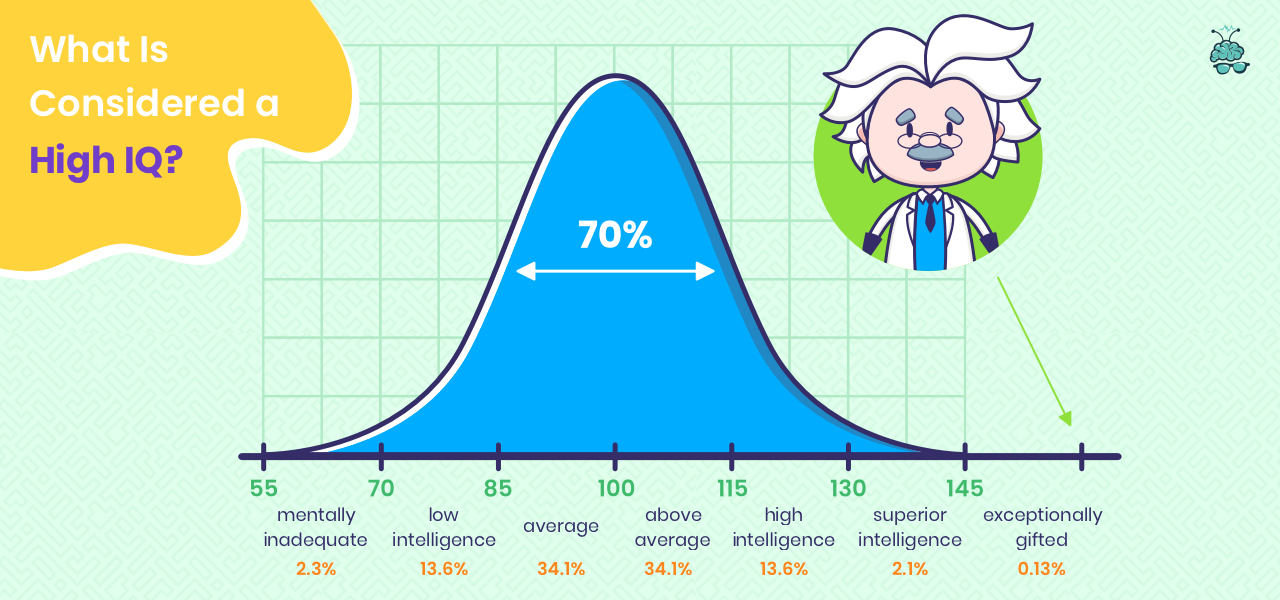

Therefore, by definition, the average IQ for adults is 100. Scores falling within one standard deviation of the mean, between 85 and 115, are generally considered to be within the normal or average range of intelligence.

Understanding the Standard Deviation

The standard deviation is a crucial statistical concept for interpreting IQ scores. It indicates the spread or variability of scores around the mean.

A standard deviation of 15 means that approximately 68% of the population will score between 85 and 115 on an IQ test. This range represents the "average" intellectual functioning for the majority of adults.

About 95% of the population will score within two standard deviations of the mean, between 70 and 130. Scores outside this range are considered to be significantly below or above average.
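
These percentages follow from treating IQ scores as normally distributed with a mean of 100 and a standard deviation of 15. As a quick check, the sketch below uses Python's standard-library `statistics.NormalDist`; the helper names `share_between` and `percentile` are illustrative, and the normal curve is itself an idealization of real score distributions.

```python
from statistics import NormalDist

# Idealized IQ distribution: mean 100, standard deviation 15.
iq = NormalDist(mu=100, sigma=15)


def share_between(low, high):
    """Fraction of the population expected to score between low and high."""
    return iq.cdf(high) - iq.cdf(low)


def percentile(score):
    """Percentage of the population expected to score at or below score."""
    return 100 * iq.cdf(score)


print(f"{share_between(85, 115):.1%}")  # 68.3%
print(f"{share_between(70, 130):.1%}")  # 95.4%
print(f"{percentile(100):.0f}")         # 50
```

The same helpers can answer related questions, such as what share of people would be expected to score above 130 under this model (`1 - iq.cdf(130)`).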

Factors Influencing IQ Scores

While IQ tests aim to measure cognitive abilities, numerous factors can influence an individual's score. These factors range from genetic predispositions to environmental influences and socioeconomic circumstances.

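Research suggests that both genetics and environment play a significant role in shaping intelligence. Studies on twins, particularly those raised apart, have provided evidence for the heritability of IQ, with estimates for adults commonly ranging from about 50% to 80%. Heritability describes how much of the variation in scores within a studied population is associated with genetic differences; it does not describe how much of any one person's IQ is inherited.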

However, environmental factors such as nutrition, education, access to healthcare, and exposure to stimulating experiences also exert a considerable influence on cognitive development and IQ scores.

The Flynn Effect

One of the most intriguing phenomena related to IQ is the Flynn effect, named after researcher James R. Flynn. The Flynn effect refers to the observed increase in average IQ scores over time.

Studies have shown that average IQ scores rose substantially across the 20th century in many countries. This suggests that environmental factors, such as improved nutrition, better education, and increased cognitive stimulation from technology and media, have a positive impact on cognitive abilities. The trend is not uniform, however: since roughly the 1990s, several countries have reported gains that slowed, stalled, or reversed.

However, the Flynn effect presents challenges for interpreting IQ scores over long periods. Because tests are periodically re-normed so that the mean stays at 100, an IQ score of 100 today might not represent the same level of test performance as an IQ score of 100 several decades ago.
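
To make the comparison problem concrete, here is a rough sketch of how a score obtained against outdated norms might be adjusted. The default rate of three points per decade is a commonly cited approximation and is an assumption here, not a figure from this article; actual gains vary by country, period, and type of test, and the function name `adjust_for_norm_age` is hypothetical.

```python
def adjust_for_norm_age(observed_iq, years_since_norming, points_per_decade=3.0):
    """Estimate the score a person would get against up-to-date norms.

    Assumes a constant upward drift in population performance
    (points_per_decade), which is a simplification: the real rate
    is not constant and has reversed in some countries.
    """
    drift = points_per_decade * years_since_norming / 10.0
    return observed_iq - drift


# A score of 100 on a test normed 20 years ago, under the assumed drift.
print(adjust_for_norm_age(100, 20))  # 94.0
```

Under these assumptions, a person who scores 100 on a test normed 20 years earlier would score about 94 against current norms, which is one reason test publishers update their norms periodically.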

Limitations and Criticisms of IQ Tests

Despite their widespread use, IQ tests are not without their limitations and criticisms. Some critics argue that IQ tests primarily measure specific types of intelligence, such as logical-mathematical and verbal-linguistic abilities, while neglecting other important aspects of intelligence, such as emotional intelligence, creativity, and practical skills.

Furthermore, cultural biases in test design and administration can lead to inaccurate or unfair assessments of individuals from diverse backgrounds. Standardized tests may not adequately capture the cognitive abilities of individuals who have not had the same educational opportunities or who come from different cultural contexts.

It is crucial to interpret IQ scores with caution and to avoid using them as the sole measure of an individual's worth or potential. IQ scores should be considered as one piece of information among many when evaluating a person's cognitive abilities and overall capabilities.

Beyond the Number: A Holistic View of Intelligence

Increasingly, researchers and educators are advocating for a more holistic view of intelligence that goes beyond the confines of IQ scores. This perspective recognizes that intelligence is a multifaceted construct encompassing a wide range of cognitive, emotional, and social abilities.

Alternative theories of intelligence, such as Howard Gardner's theory of multiple intelligences, propose that individuals possess different types of intelligence, including musical, spatial, bodily-kinesthetic, interpersonal, and intrapersonal intelligences. These theories emphasize the importance of recognizing and nurturing diverse talents and skills.

A holistic approach to intelligence acknowledges the limitations of standardized tests and emphasizes the importance of considering an individual's strengths, weaknesses, and unique potential in a broader context.

The Future of Intelligence Assessment

The field of intelligence assessment is continuously evolving, with ongoing research aimed at developing more comprehensive and culturally sensitive measures of cognitive abilities. Advances in neuroscience and cognitive psychology are providing new insights into the neural basis of intelligence and the factors that contribute to cognitive development.

Future assessments may incorporate more dynamic and adaptive testing methods that can better capture an individual's learning potential and problem-solving skills. Furthermore, the integration of technology, such as computer-based assessments and artificial intelligence, could lead to more personalized and efficient evaluations of cognitive abilities.

Ultimately, the goal of intelligence assessment should be to provide valuable information that can be used to support individuals in reaching their full potential, rather than simply assigning a numerical label based on a single test score.

While the average adult IQ remains at 100 by definition, the pursuit of understanding intelligence is far from static. Embracing a broader perspective that considers the complexities of human cognition and the limitations of standardized tests is crucial for fostering a more inclusive and equitable approach to education and human development.
