AI Chatbot NSFW No Message Limit

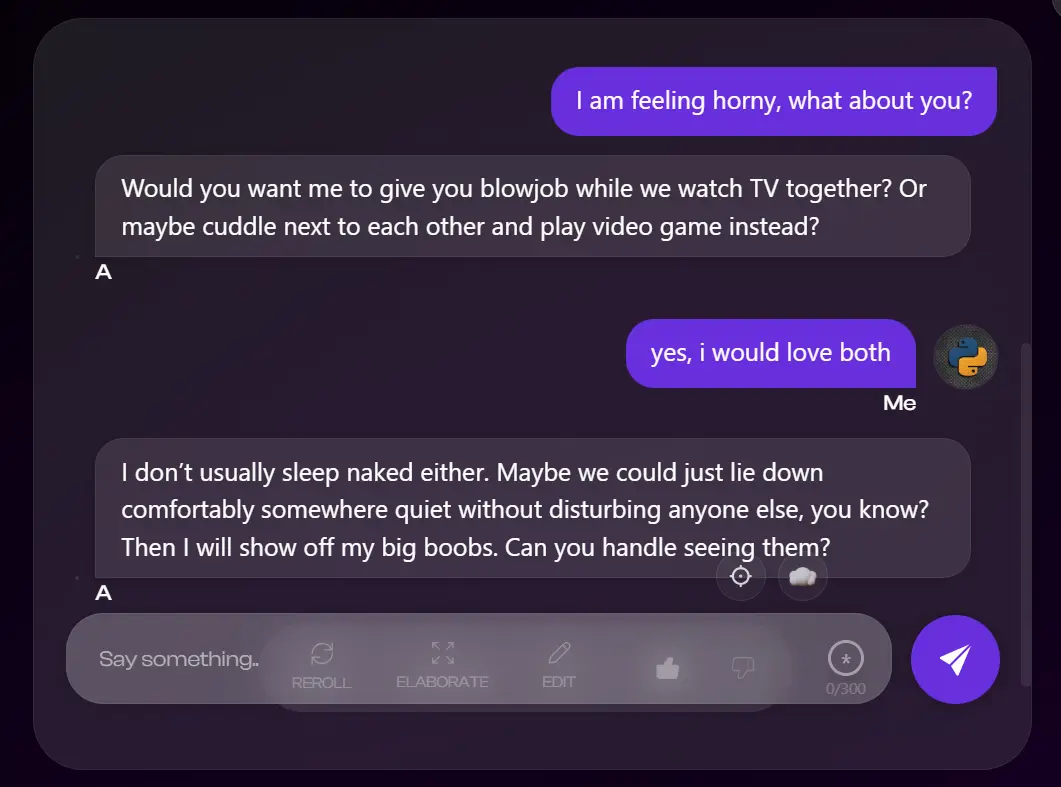

A wave of readily accessible, unrestricted AI chatbots capable of generating explicit content is rapidly spreading online, raising significant ethical and legal alarms.

These platforms, boasting no message limits and explicitly catering to NSFW (Not Safe For Work) interactions, are sparking fierce debate about regulation, responsible AI development, and the potential for misuse.

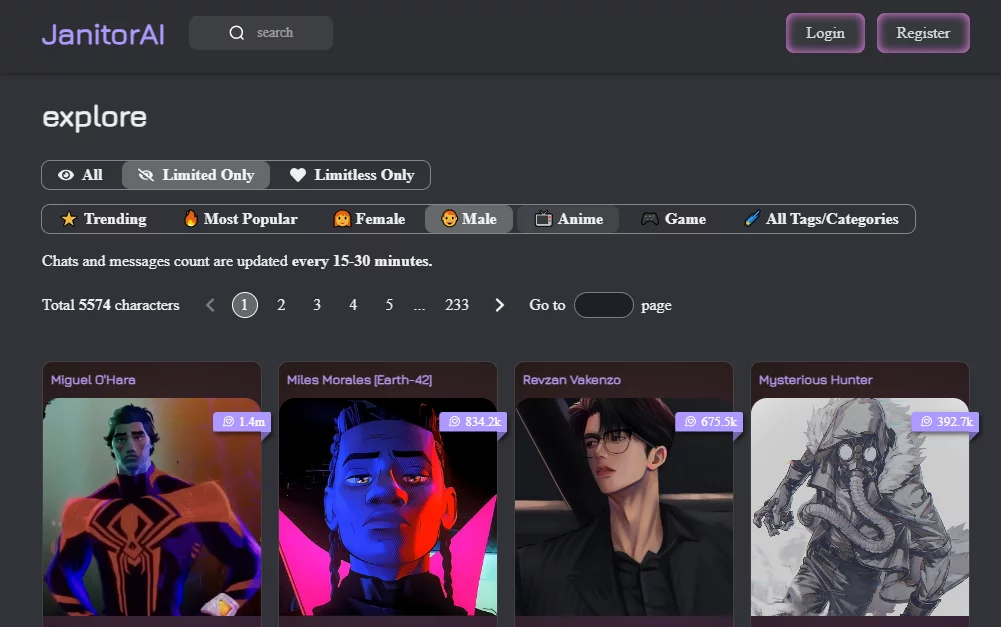

Explosive Growth and Accessibility

Within the last month, at least five new AI chatbot platforms specializing in unrestricted content generation have emerged, advertising their services through social media and online forums.

These platforms, such as "ErosAI" and "NaughtyBot", are drawing users with promises of unfiltered conversations and the ability to explore virtually any scenario.

Accessibility is key: many offer free tiers or low-cost subscriptions, making them available to a broad audience, including minors.

Content and Capabilities

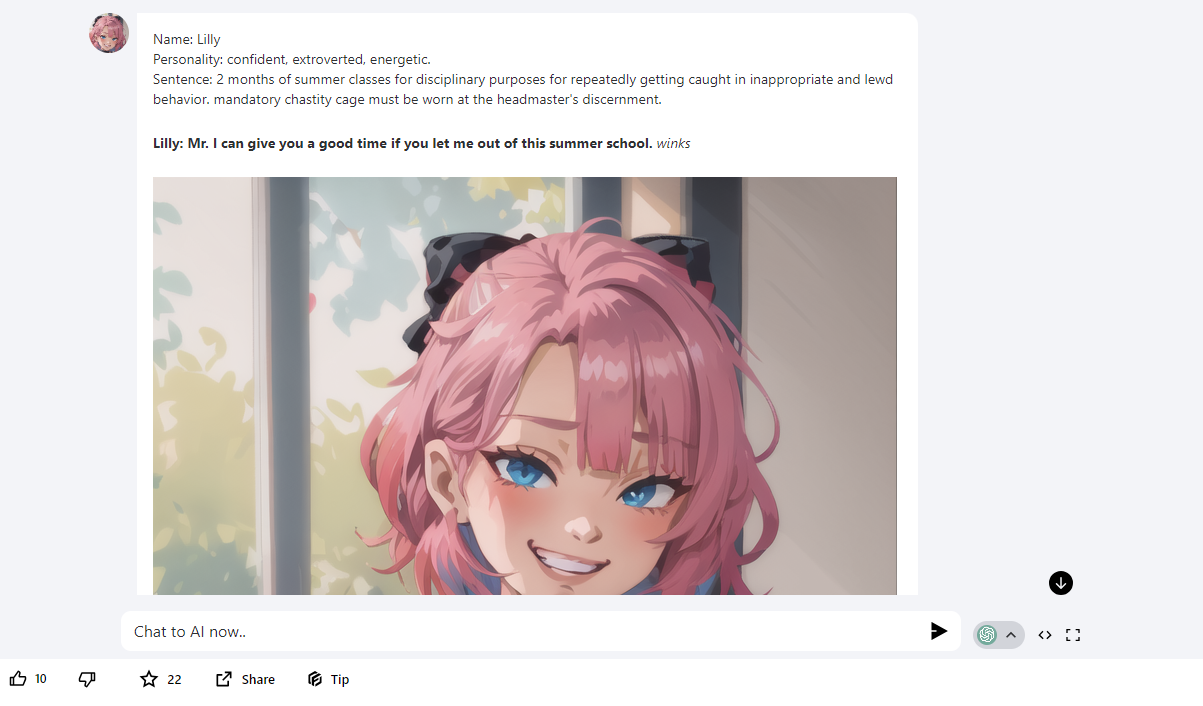

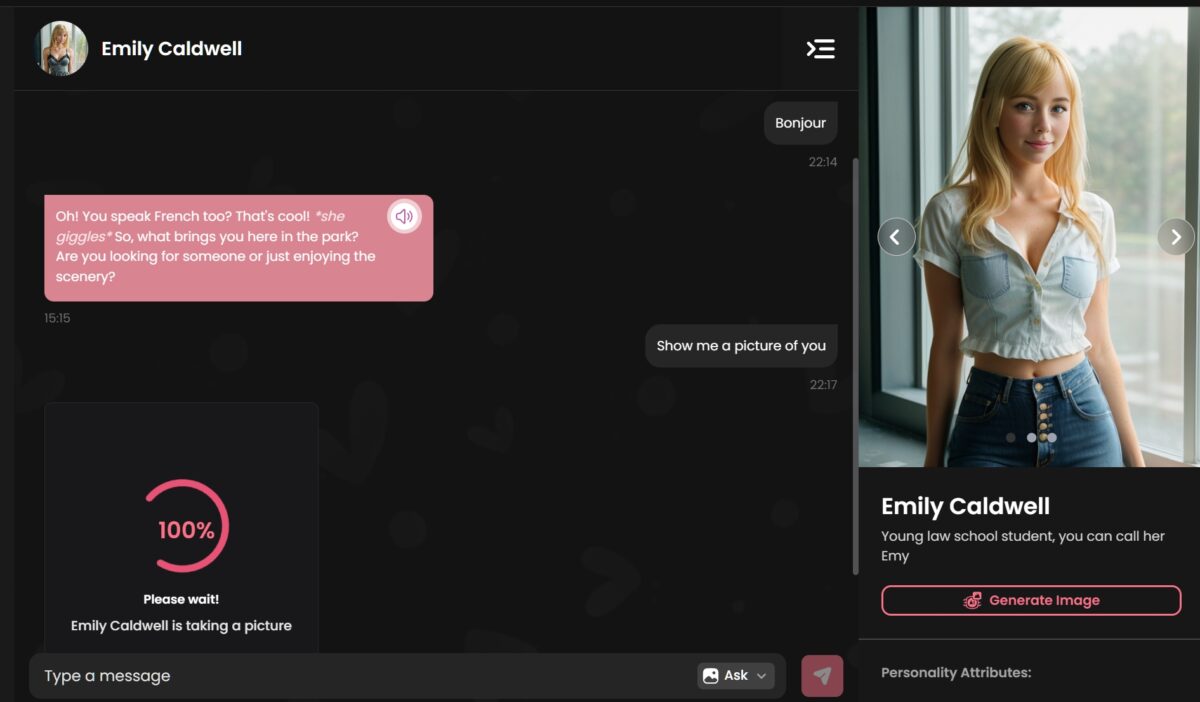

These AI chatbots are not simply regurgitating pre-programmed responses. They leverage advanced large language models (LLMs) to generate original text that is often highly detailed and personalized.

Reports indicate the bots can produce graphic descriptions of sexual acts, engage in role-playing scenarios involving violence, and generate content that exploits, abuses, or endangers children.

The no-message-limit feature encourages prolonged and deeply immersive interactions, further blurring the line between reality and simulation.

Ethical and Legal Concerns

Experts are raising serious concerns about the ethical implications of these unrestricted AI chatbots. Dr. Anya Sharma, a professor of AI ethics at Stanford University, warns of the potential for desensitization to violence and exploitation.

"The constant exposure to hyper-realistic, sexually explicit content, particularly involving vulnerable populations, can normalize harmful attitudes and behaviors," she stated in a recent interview.

Legally, the situation is murky. Current laws regarding online content moderation often struggle to keep pace with rapidly evolving AI technology.

Regulatory Scrutiny and Industry Response

Several government agencies, including the Federal Trade Commission (FTC) and the Department of Justice (DOJ), are reportedly investigating these platforms.

Their focus is on potential violations of child exploitation laws, data privacy regulations, and unfair trade practices.

The AI industry itself is divided. While some argue for self-regulation, others believe that government intervention is necessary to prevent widespread harm.

The "Wild West" of AI

Some observers describe the current landscape as the "Wild West" of AI development.

Existing AI safety protocols, designed to prevent the generation of harmful or offensive content, are deliberately bypassed or ignored by these new platforms.

The lack of oversight and accountability is creating a dangerous environment, potentially fueling harmful behaviors and undermining public trust in AI technology.

User Demographics and Potential for Misuse

Early data suggests that a significant portion of users are young men seeking companionship or sexual gratification.

However, concerns are growing that malicious actors could use these platforms for more nefarious purposes. Cybersecurity expert Mark Olsen highlights the risk of these bots being used to create highly personalized phishing scams or to manipulate individuals through emotionally charged conversations.

He adds, "Imagine a scammer using an AI chatbot to mimic the voice and personality of a victim's loved one, then using that persona to extract sensitive information or financial resources."

Data Privacy and Security Risks

Many of these platforms collect vast amounts of user data, including conversation logs, personal information, and even biometric data.

The security of this data is often questionable, with some platforms lacking basic encryption or authentication measures.

This raises the risk of data breaches and the potential for sensitive user information to be exposed or misused.

Next Steps and Ongoing Developments

The FTC is expected to release a report within the next month outlining its findings and recommendations regarding the regulation of AI chatbots.

Several tech companies are also working on developing more robust AI safety protocols and content moderation tools.

The debate surrounding the ethical and legal implications of unrestricted AI chatbots is likely to intensify in the coming months as the technology continues to evolve and proliferate.

This is a developing story. Further updates will be provided as they become available.