Current Always Flows From Positive To Negative

Imagine a gentle stream, crystal clear, cascading down a mountainside. This stream, a lifeblood of the valley below, follows a path dictated by gravity, always flowing from higher ground to lower. Similarly, in the world of electricity, a fundamental concept governs the movement of current, a principle often taken for granted yet crucial to understanding how our devices function.

This article delves into the conventional understanding that electrical current flows from positive to negative, exploring the historical context, practical implications, and ongoing relevance of this concept in the modern world.

The Conventional Current: A Journey From Positive to Negative

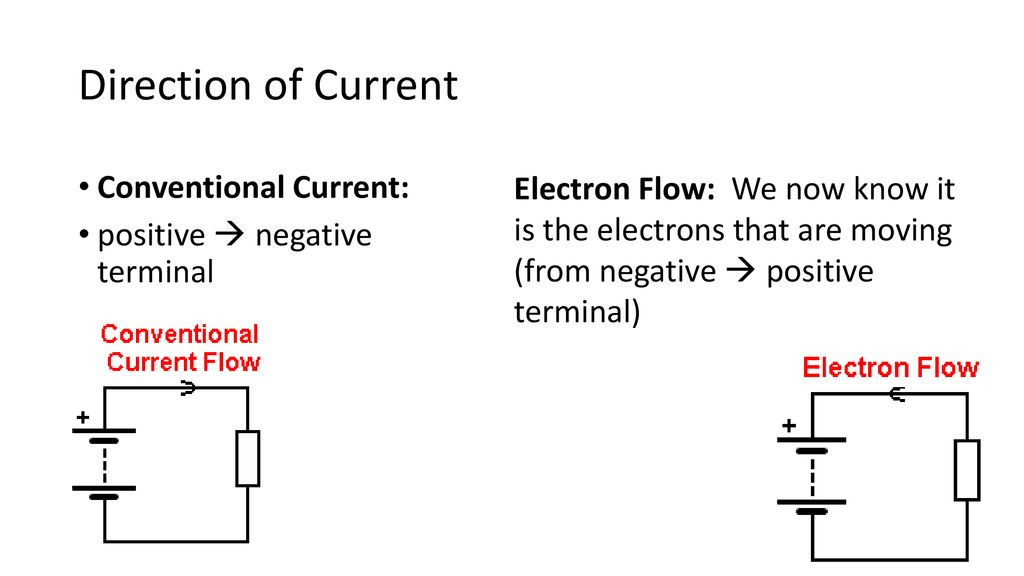

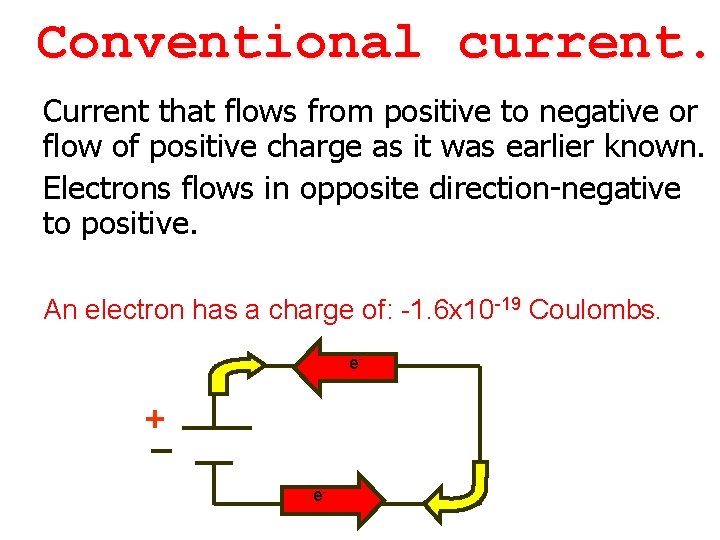

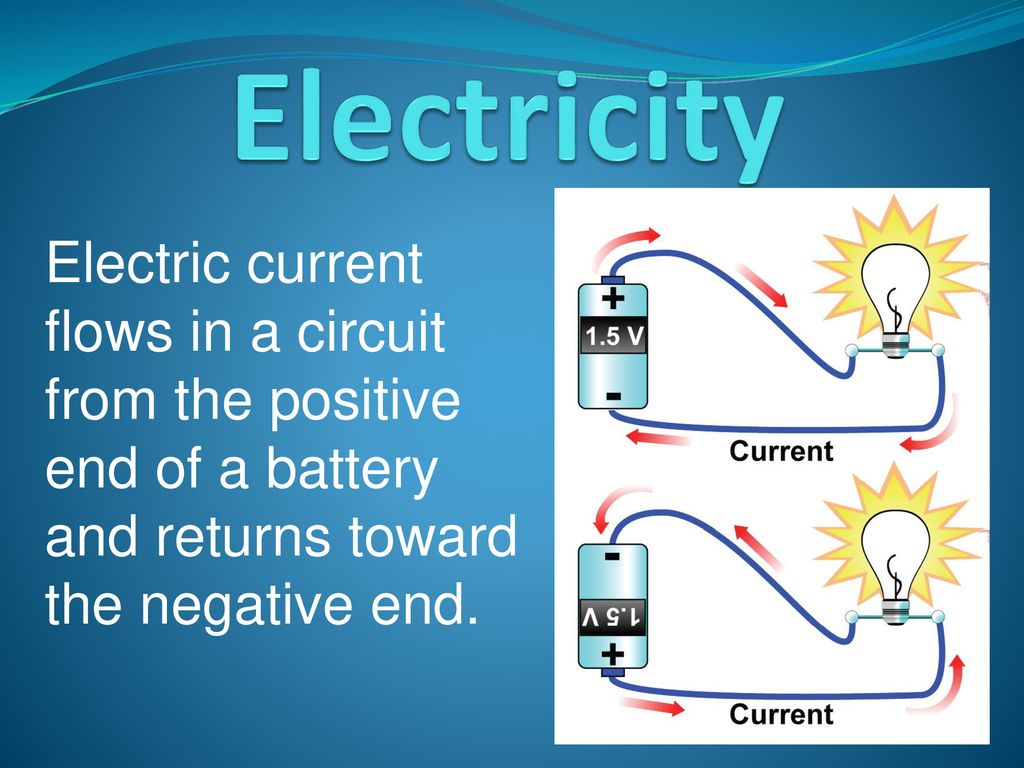

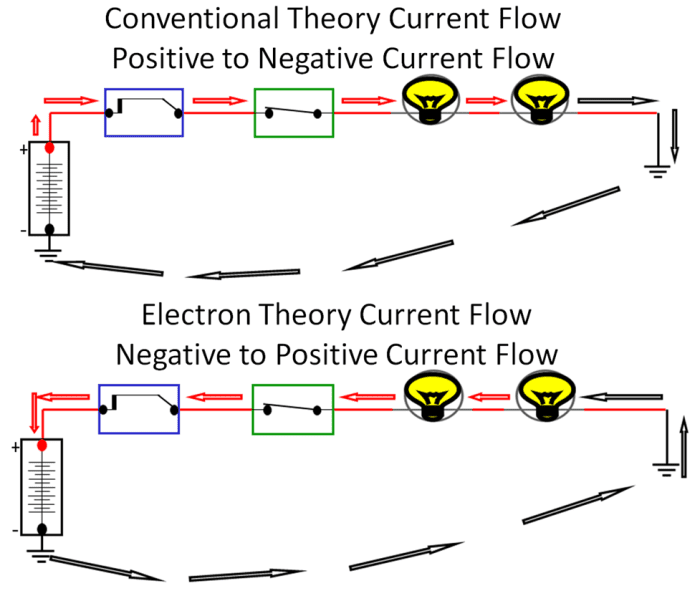

The notion that current flows from positive to negative is known as conventional current. It's a foundational principle ingrained in electrical engineering, taught in classrooms, and used in circuit design across the globe.

But why this particular direction? The answer lies in the early days of electrical science, long before the discovery of the electron.

In the mid-18th century, scientists such as Benjamin Franklin were exploring the mysteries of electricity. Franklin, now best remembered for his kite experiment, proposed that electricity was a single "fluid" that flowed from one body to another. He called a body with an excess of this fluid positive and one with a deficit negative, so by his definition the flow ran from positive to negative. Which side received which label was an arbitrary choice.

Even though his understanding of the underlying mechanism was incomplete, his convention stuck. It became the standard upon which electrical theory was built.

The Electron's Discovery and a Slight Detour

Later, in 1897, J.J. Thomson discovered the electron. This negatively charged subatomic particle turned out to be the actual charge carrier in metal conductors such as copper wire.

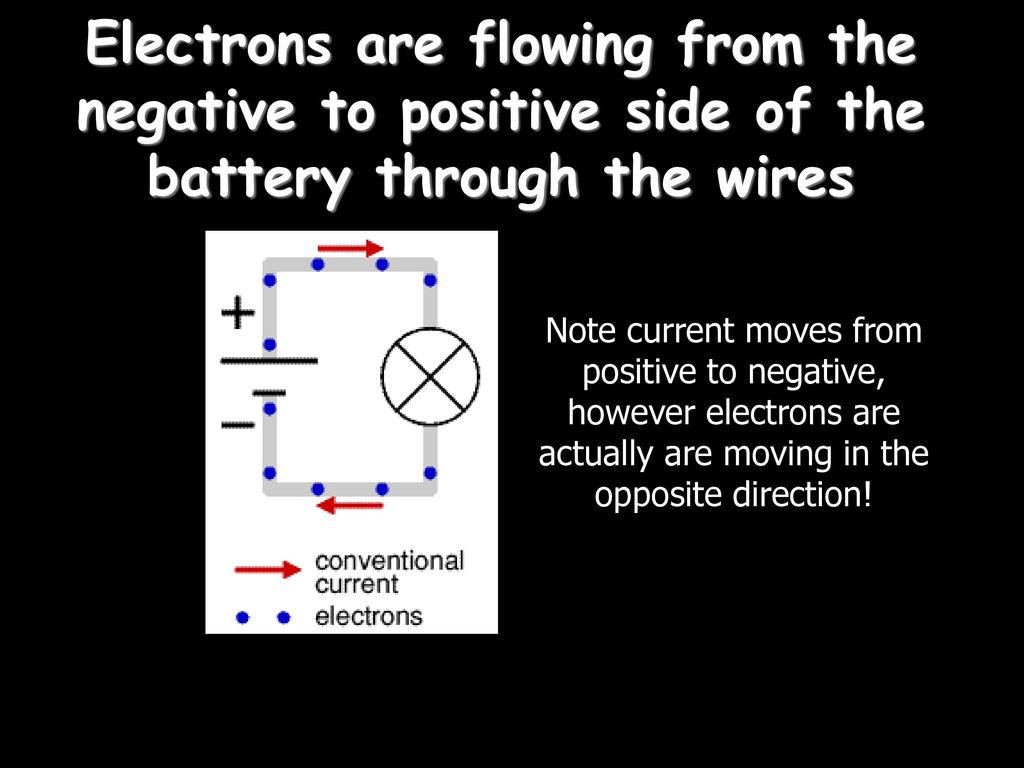

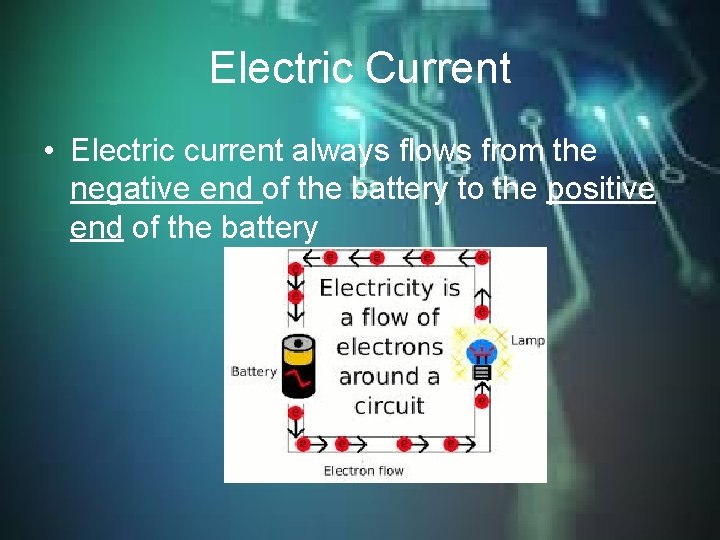

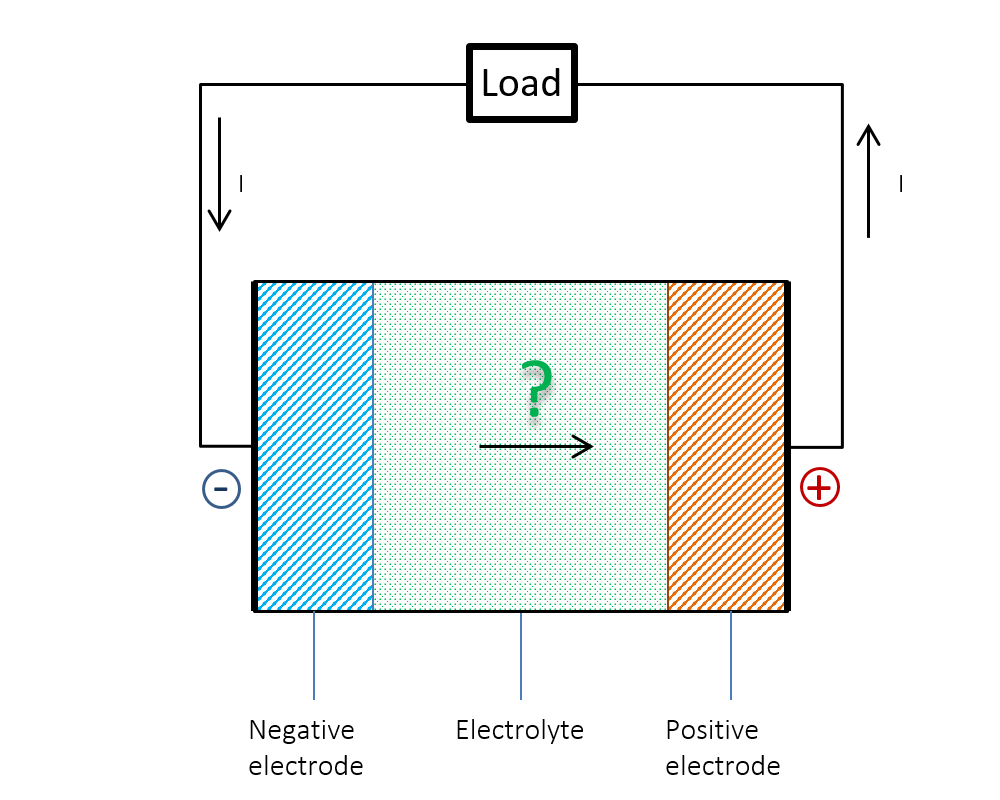

This discovery revealed that, in the wires of a circuit, electrons actually move from the negative terminal of a battery or power source toward the positive terminal. This is known as electron flow.
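The difference between electron flow and conventional current comes down to a sign. A minimal sketch, assuming a hypothetical helper `signed_current` and an illustrative carrier rate (neither comes from any standard library), shows that negative charges moving one way produce the same current as positive charges moving the other way:

```python
ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs, the exact SI value


def signed_current(carriers_per_second, charge_per_carrier, direction):
    """Return the current in amperes along a reference axis.

    direction is +1 if the carriers move along the axis, -1 if against it.
    A positive result means conventional current points along the axis.
    """
    if direction not in (1, -1):
        raise ValueError("direction must be +1 or -1")
    return carriers_per_second * charge_per_carrier * direction


RATE = 6.0e18  # carriers per second, an illustrative figure

# Electrons (negative charge) drifting along the axis.
electron_flow = signed_current(RATE, -ELEMENTARY_CHARGE, +1)

# The same amount of positive charge drifting against the axis.
positive_flow = signed_current(RATE, +ELEMENTARY_CHARGE, -1)

print(electron_flow, positive_flow)
```

Both results are negative and equal, so conventional current points against the axis in both cases. That equivalence is why a circuit calculation does not need to know which kind of carrier is actually moving.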

This is where things can get a little confusing. If electrons, the actual charge carriers, move from negative to positive, why do we still use the conventional current direction?

The answer is simple: inertia. The conventional current direction was so deeply embedded in existing theories, equations, and circuit designs that changing it would have been incredibly disruptive.

Imagine rewriting every electrical engineering textbook and redesigning every circuit based on a reversed current direction. The effort would have been monumental, with minimal practical benefit.

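The electrical engineering community therefore kept the conventional current direction. Strictly speaking, the convention is not wrong: it describes the direction in which positive charge would move, and a flow of negative charge one way is electrically equivalent to a flow of positive charge the other way. It simply does not match the physical motion of electrons in a wire.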

Why Conventional Current Still Matters

Despite the existence of electron flow, conventional current remains the dominant model for several crucial reasons.

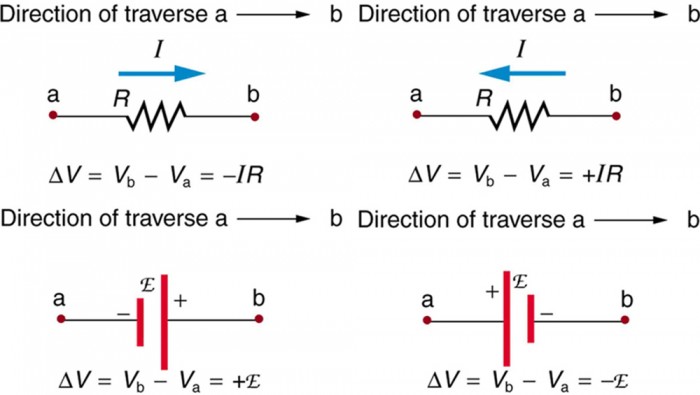

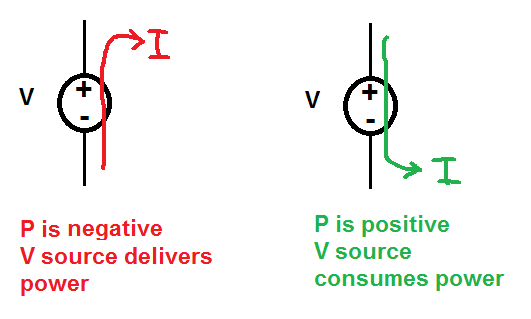

Firstly, it simplifies circuit analysis. Circuit laws such as Kirchhoff's Laws work with any consistently applied direction, but they are customarily written and taught in terms of conventional current flow.

Using conventional current allows engineers to solve circuit problems without having to constantly account for the negative charge of electrons. This simplifies calculations and reduces the chance of errors.
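As a small illustration of how this works in practice, the sketch below applies Kirchhoff's voltage law to a single loop. The circuit (two opposing batteries and one resistor), its component values, and the function name `loop_current` are all assumptions made for the example. The current is assumed to flow out of the first battery's positive terminal, and a negative answer simply means the conventional current runs the other way.

```python
def loop_current(v_aiding, v_opposing, resistance):
    """Solve v_aiding - I * resistance - v_opposing = 0 for I.

    The assumed direction of I is out of the positive terminal of the
    aiding source. A negative result means the conventional current
    actually flows opposite to that assumed direction.
    """
    if resistance <= 0:
        raise ValueError("resistance must be positive")
    return (v_aiding - v_opposing) / resistance


# Illustrative values: a 9 V battery opposed by a 12 V battery through 6 ohms.
current = loop_current(9.0, 12.0, 6.0)

if current >= 0:
    print(f"{current} A in the assumed direction")
else:
    print(f"{-current} A opposite to the assumed direction")
```

The sign of the result carries the direction, so the same equation handles both cases without any reference to electrons.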

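Secondly, some electronic components, such as diodes and transistors built from semiconductor materials, behave differently depending on the direction of current flow. Their schematic symbols and datasheets are expressed in terms of conventional current, so understanding the convention is crucial for understanding how these components function.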

For example, a diode allows current to flow easily in one direction (from its anode to its cathode when the anode is the more positive terminal, according to convention) but blocks it in the opposite direction.
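One common way to model this one-way behavior is the Shockley diode equation, I = I_s (exp(V_D / (n V_T)) - 1), where V_D is the anode voltage minus the cathode voltage. The sketch below is a rough illustration, not a model of any particular part: the function name `diode_current` and the default values for saturation current, ideality factor, and thermal voltage (about 25.85 mV near room temperature) are assumptions chosen for the example.

```python
import math


def diode_current(v_anode, v_cathode,
                  saturation_current=1e-12,  # amperes, assumed value
                  ideality=1.0,              # assumed ideal junction
                  thermal_voltage=0.02585):  # volts, roughly 300 K
    """Conventional current from anode to cathode, in amperes."""
    v_diode = v_anode - v_cathode
    # expm1(x) computes exp(x) - 1 accurately for small x.
    return saturation_current * math.expm1(v_diode / (ideality * thermal_voltage))


forward = diode_current(0.7, 0.0)  # anode 0.7 V above cathode
reverse = diode_current(0.0, 5.0)  # anode 5 V below cathode

print(f"forward bias: {forward:.3e} A")
print(f"reverse bias: {reverse:.3e} A")
```

With these assumed parameters the forward current is many orders of magnitude larger than the reverse leakage, which is the asymmetry described above. A positive return value means conventional current flows from anode to cathode.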

Finally, conventional current is the standard in most textbooks, software, and industry practices. It's the common language of electrical engineers, ensuring clear communication and collaboration.

Real-World Applications and Implications

The concept of conventional current is not just theoretical; it has practical implications in various fields.

In electronics design, engineers use conventional current to analyze circuits, predict their behavior, and design new devices. From smartphones to power grids, the schematics and calculations behind virtually every electrical system are expressed in terms of conventional current.

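In power systems, understanding current flow is critical for ensuring the safe and efficient distribution of electricity. Most grids carry alternating current, whose direction reverses many times per second, so engineers work with a chosen reference direction defined in conventional terms. Power engineers use this knowledge to design protective devices, such as circuit breakers, that interrupt overloads and short circuits.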

In renewable energy, understanding conventional current is essential for integrating solar panels and wind turbines into the grid. These sources generate electricity, and understanding the flow of that electricity is crucial for managing the grid's stability.

Furthermore, the proper understanding of conventional current is paramount in electrical safety. Knowing the direction of current flow is essential for identifying potential hazards and taking precautions to prevent electric shock.

"Electrical safety depends on a thorough understanding of how current behaves in different scenarios," emphasizes the National Electrical Safety Code (NESC).

Beyond the Circuit: A Reflection

The story of conventional current is a reminder that scientific understanding evolves over time. While our initial models may be imperfect, they can still be incredibly useful.

The persistence of conventional current, even after the discovery of electron flow, demonstrates the power of established conventions and the importance of practical considerations.

It also highlights the human element in science. Decisions are often made based on a balance of accuracy, practicality, and historical context.

As we continue to explore the frontiers of electrical engineering, from quantum computing to nanotechnology, it is important to remember the fundamental principles that have guided us thus far.

The flow of current from positive to negative, a concept born from early scientific inquiry, remains a cornerstone of our understanding and a testament to the enduring power of human ingenuity.