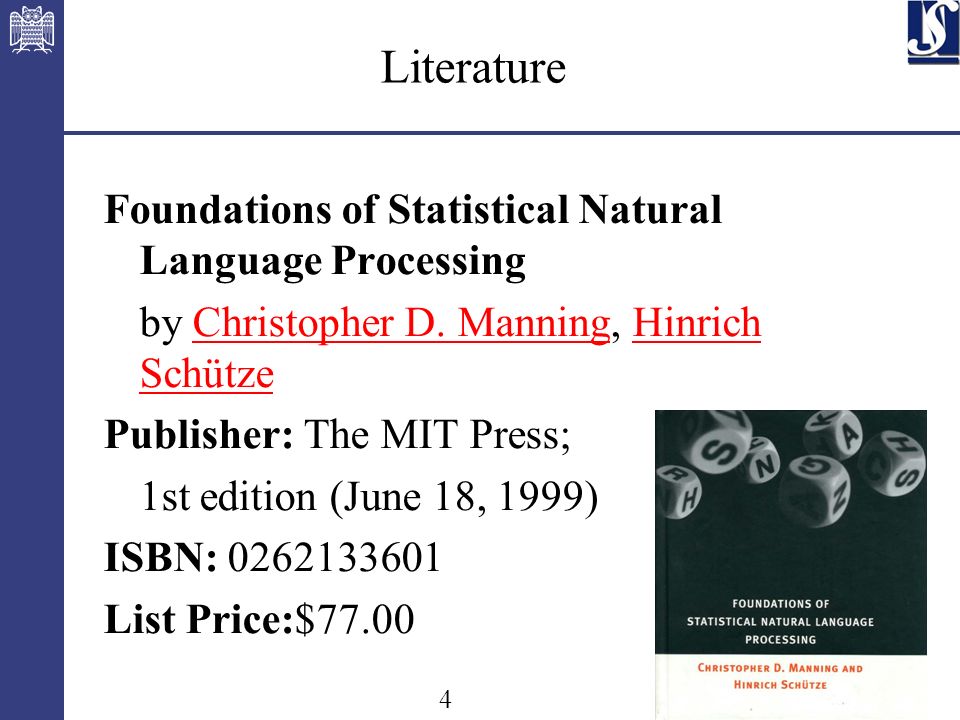

Foundations of Statistical Natural Language Processing

In a world increasingly shaped by artificial intelligence, the unsung hero powering many advancements is Statistical Natural Language Processing (SNLP). From the predictive text on our phones to sophisticated translation tools, SNLP’s algorithms tirelessly sift through vast quantities of text data, extracting meaning and enabling machines to communicate in human-like ways. But beneath the surface of these seemingly magical applications lies a complex and evolving field, grappling with fundamental questions about language itself.

This article delves into the foundations of SNLP, exploring its core principles, key techniques, and the challenges it faces in an ever-evolving digital landscape. We will examine how SNLP has revolutionized fields like machine translation, sentiment analysis, and information retrieval, impacting industries from healthcare to finance.

The Rise of Statistical Approaches

Traditionally, Natural Language Processing (NLP) relied heavily on hand-crafted rules and linguistic theories. However, these rule-based systems proved brittle and struggled to handle the inherent ambiguity and variability of natural language.

The move toward statistical methods in the late 20th century marked a turning point. Instead of prescribing rules by hand, SNLP uses statistical models trained on large text corpora to learn patterns and relationships within language.

This data-driven approach allowed for more robust and adaptable systems, capable of handling real-world text with greater accuracy.

Core Techniques and Models

At the heart of SNLP lies a collection of statistical techniques. N-grams, for instance, are contiguous sequences of N words. An n-gram language model estimates the probability of the next word in a sentence from the N-1 words that precede it.
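To make the n-gram idea concrete, here is a minimal sketch of a bigram (N = 2) model that estimates next-word probabilities from raw counts. The toy corpus, the `<s>`/`</s>` boundary markers, and the function names are illustrative assumptions, and the sketch uses no smoothing, which a real system would need.

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    """Estimate P(next word | previous word) from raw bigram counts."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # Hypothetical boundary markers so sentence starts and ends are modeled too.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    model = {}
    for prev, followers in counts.items():
        total = sum(followers.values())
        model[prev] = {word: c / total for word, c in followers.items()}
    return model

def predict_next(model, word):
    """Return the most probable follower of `word`, or None if it was never seen."""
    candidates = model.get(word, {})
    return max(candidates, key=candidates.get) if candidates else None

# Toy corpus, invented for illustration only.
toy_corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
bigram_model = train_bigram_model(toy_corpus)

print(predict_next(bigram_model, "the"))   # cat
print(bigram_model["sat"])                 # {'on': 1.0}
```

In this corpus "the" is followed by "cat" in two of its six occurrences, so "cat" is the top prediction. Any word pair that never occurs gets probability zero, which is why practical n-gram models add smoothing or back-off.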

Hidden Markov Models (HMMs) are used for tasks like part-of-speech tagging, where the goal is to identify the grammatical category (noun, verb, determiner, and so on) of each word in a sentence. These models assume that the observed sequence of words is generated by an underlying hidden Markov chain representing the sequence of part-of-speech tags.
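Decoding the most likely tag sequence under an HMM is usually done with the Viterbi algorithm. The sketch below is one way to write it, under stated assumptions: the tag set and all start, transition, and emission probabilities are made-up toy values, and the `floor` parameter is a crude stand-in for proper smoothing of unseen events.

```python
import math

def viterbi(words, tags, start_p, trans_p, emit_p, floor=1e-12):
    """Return the most probable tag sequence for `words` under an HMM."""
    if not words:
        return []

    def lp(p):
        # Work in log space; floor zero probabilities to avoid log(0).
        return math.log(max(p, floor))

    # best[i][tag] = (log-prob of the best path ending in `tag` at position i, previous tag)
    best = [{t: (lp(start_p.get(t, 0)) + lp(emit_p[t].get(words[0], 0)), None)
             for t in tags}]
    for i in range(1, len(words)):
        column = {}
        for t in tags:
            score, prev = max(
                (best[i - 1][p][0] + lp(trans_p[p].get(t, 0)), p) for p in tags
            )
            column[t] = (score + lp(emit_p[t].get(words[i], 0)), prev)
        best.append(column)

    # Follow the back-pointers from the best final tag.
    path = [max(tags, key=lambda t: best[-1][t][0])]
    for i in range(len(words) - 1, 0, -1):
        path.append(best[i][path[-1]][1])
    return path[::-1]

# Toy parameters, invented for illustration only.
tags = ["DET", "NOUN", "VERB"]
start_p = {"DET": 0.8, "NOUN": 0.15, "VERB": 0.05}
trans_p = {
    "DET": {"DET": 0.05, "NOUN": 0.9, "VERB": 0.05},
    "NOUN": {"DET": 0.1, "NOUN": 0.2, "VERB": 0.7},
    "VERB": {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1},
}
emit_p = {
    "DET": {"the": 0.9, "a": 0.1},
    "NOUN": {"dog": 0.5, "walk": 0.2, "barks": 0.1, "park": 0.2},
    "VERB": {"barks": 0.6, "walk": 0.4},
}

print(viterbi(["the", "dog", "barks"], tags, start_p, trans_p, emit_p))
# ['DET', 'NOUN', 'VERB']
```

Note that "barks" can be emitted by both NOUN and VERB here. The transition probabilities (a verb is likely after a noun) are what tip the decision, which is the kind of contextual disambiguation the hidden chain provides.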

Another vital tool is the probabilistic context-free grammar (PCFG), which assigns probabilities to different parse trees for a given sentence, allowing the system to choose the most likely interpretation.
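As a rough illustration of how a PCFG scores competing parses, the sketch below multiplies the probabilities of the rules used in each tree and keeps the higher-scoring one. The grammar, its probabilities, and the two hand-written trees are all hypothetical. A real system would find candidate trees with a parsing algorithm such as CKY rather than enumerating them by hand.

```python
import math

# Hypothetical rule probabilities; for each left-hand side they sum to 1.
RULE_PROBS = {
    ("S", ("NP", "VP")): 1.0,
    ("VP", ("V", "NP")): 0.6,
    ("VP", ("VP", "PP")): 0.4,
    ("NP", ("NP", "PP")): 0.2,
    ("NP", ("she",)): 0.3,
    ("NP", ("stars",)): 0.3,
    ("NP", ("telescopes",)): 0.2,
    ("PP", ("P", "NP")): 1.0,
    ("V", ("saw",)): 1.0,
    ("P", ("with",)): 1.0,
}

def tree_probability(tree):
    """Probability of a parse tree = product of the probabilities of its rules.

    A tree is a tuple (label, child, ...); a child is a subtree or a word string.
    """
    label, *children = tree
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    prob = RULE_PROBS[(label, rhs)]
    for child in children:
        if not isinstance(child, str):
            prob *= tree_probability(child)
    return prob

# Two parses of "she saw stars with telescopes".
# Reading 1: the seeing was done with telescopes (PP attaches to the verb phrase).
vp_attach = ("S", ("NP", "she"),
             ("VP", ("VP", ("V", "saw"), ("NP", "stars")),
                    ("PP", ("P", "with"), ("NP", "telescopes"))))
# Reading 2: the stars have telescopes (PP attaches to the noun phrase).
np_attach = ("S", ("NP", "she"),
             ("VP", ("V", "saw"),
                    ("NP", ("NP", "stars"),
                           ("PP", ("P", "with"), ("NP", "telescopes")))))

candidates = {"vp_attach": vp_attach, "np_attach": np_attach}
best_parse = max(candidates, key=lambda name: tree_probability(candidates[name]))
print(best_parse)  # vp_attach
```

With these toy numbers the verb-attachment reading scores 0.00432 against 0.00216 for the noun-attachment reading, so the grammar prefers the interpretation in which the telescopes are the instrument.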

Naive Bayes classifiers are also widely employed, particularly in sentiment analysis and text classification. They are "naive" because they assume that the words in a document are conditionally independent given its class. Despite this simplification, they often provide surprisingly accurate results and remain a common baseline.
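A multinomial Naive Bayes classifier fits in a few lines. The following is a minimal sketch, assuming whitespace tokenization and add-one (Laplace) smoothing; the class name, the `alpha` parameter, and the four training reviews are invented for illustration.

```python
import math
from collections import Counter, defaultdict

class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-alpha smoothing (illustrative sketch)."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha
        self.class_counts = Counter()            # documents per class
        self.word_counts = defaultdict(Counter)  # word counts per class
        self.vocab = set()

    def fit(self, documents, labels):
        for doc, label in zip(documents, labels):
            tokens = doc.lower().split()
            self.class_counts[label] += 1
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, document):
        total_docs = sum(self.class_counts.values())
        scores = {}
        for label, n_docs in self.class_counts.items():
            # log P(class) + sum of log P(word | class), using the independence assumption
            score = math.log(n_docs / total_docs)
            denom = sum(self.word_counts[label].values()) + self.alpha * len(self.vocab)
            for token in document.lower().split():
                if token in self.vocab:  # ignore words never seen in training
                    score += math.log((self.word_counts[label][token] + self.alpha) / denom)
            scores[label] = score
        return max(scores, key=scores.get)

# Toy training data, invented for illustration only.
train_docs = [
    "great film loved it",
    "wonderful acting great story",
    "terrible plot hated it",
    "boring film awful acting",
]
train_labels = ["pos", "pos", "neg", "neg"]
classifier = NaiveBayesClassifier().fit(train_docs, train_labels)

print(classifier.predict("great story"))        # pos
print(classifier.predict("awful boring plot"))  # neg
```

The smoothing term matters: without it, a single word that never appeared with a class would drive that class's probability to zero regardless of the rest of the document.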

The Impact of Machine Learning

The advent of machine learning, particularly deep learning, has further revolutionized SNLP. Neural networks, such as recurrent neural networks (RNNs) and especially transformers, have demonstrated remarkable abilities in capturing long-range dependencies and semantic nuances in text.

Word embeddings, like Word2Vec and GloVe, represent words as dense vectors, typically with a few hundred dimensions, in which words that occur in similar contexts are located closer to each other. These embeddings allow models to capture relationships between words, such as similarity and analogy, in a more nuanced way than treating each word as an unrelated symbol.
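"Closer" in embedding space is most often measured with cosine similarity. The sketch below shows the computation on hand-made three-dimensional vectors. These numbers are purely hypothetical and were not produced by Word2Vec or GloVe, whose vectors are learned from large corpora.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def most_similar(word, embeddings):
    """Return the other word whose vector is closest to `word` by cosine similarity."""
    return max(
        (w for w in embeddings if w != word),
        key=lambda w: cosine_similarity(embeddings[word], embeddings[w]),
    )

# Hand-made toy vectors, invented for illustration only.
toy_embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.9, 0.15],
    "banana": [0.1, 0.05, 0.95],
}

print(most_similar("king", toy_embeddings))  # queen
print(round(cosine_similarity(toy_embeddings["king"], toy_embeddings["banana"]), 2))
```

Cosine similarity ignores vector length and compares direction only, which is one reason it is a common choice for comparing word vectors.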

Transformer models, such as BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), have achieved state-of-the-art results on a wide range of NLP tasks. They are pre-trained on massive amounts of text data and can be fine-tuned for specific applications, making them highly versatile.

Applications Across Industries

SNLP has found widespread applications across various industries. In machine translation, statistical models have drastically improved the accuracy and fluency of translated text, breaking down communication barriers across languages.

Sentiment analysis, powered by SNLP, allows businesses to gauge customer opinions from social media posts and reviews, providing valuable insights for product development and marketing strategies.

Information retrieval systems, like search engines, rely heavily on SNLP to understand user queries and retrieve relevant documents. These systems use techniques like stemming and lemmatization to normalize word forms, and term frequency-inverse document frequency (TF-IDF) weighting to rank documents based on their relevance to the query.
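The TF-IDF ranking described above can be sketched as follows. This is one simple variant among several, assuming whitespace tokenization, term frequency normalized by document length, and an unsmoothed `log(N / df)` inverse document frequency. The function names and the three example documents are illustrative, and no stemming or lemmatization is applied.

```python
import math
from collections import Counter

def tokenize(text):
    """Lowercase and split on whitespace (a real system would also normalize word forms)."""
    return text.lower().split()

def rank_documents(query, documents):
    """Return document indices ordered from most to least relevant to `query`."""
    tokenized = [tokenize(d) for d in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

    scores = []
    for idx, tokens in enumerate(tokenized):
        tf = Counter(tokens)
        score = 0.0
        for term in tokenize(query):
            if term in tf:
                idf = math.log(n_docs / doc_freq[term])
                score += (tf[term] / len(tokens)) * idf
        scores.append((score, idx))
    # Highest score first; ties broken by original position.
    return [idx for score, idx in sorted(scores, key=lambda s: (-s[0], s[1]))]

# Toy collection, invented for illustration only.
docs = [
    "the patient reported mild chest pain",
    "the market closed higher on strong earnings",
    "chest pain and shortness of breath in the patient",
]

print(rank_documents("chest pain", docs))  # [0, 2, 1]
```

Both medical documents contain the query terms, but the shorter one ranks first because the terms make up a larger share of its text. A word like "the", which appears in every document, gets an IDF of zero and contributes nothing to the score.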

In healthcare, SNLP is used to analyze electronic health records, identify potential drug interactions, and even predict patient outcomes. This helps improve the quality of care and reduce healthcare costs.

Challenges and Future Directions

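Despite its successes, SNLP faces several challenges. One major challenge is dealing with the ambiguity and context-dependence of natural language. The word "bank", for example, can refer to a financial institution or the side of a river, and only the surrounding context reveals which is meant.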

Another challenge is the scarcity of labeled data for certain languages and domains. Low-resource languages, for example, lack the large datasets needed to train effective statistical models.

Researchers are actively working on addressing these challenges by developing new techniques for unsupervised and semi-supervised learning. They are also exploring ways to transfer knowledge from high-resource languages to low-resource languages through techniques like cross-lingual transfer learning.

Another promising area of research is the development of more explainable and interpretable SNLP models. As SNLP systems become more complex, it is increasingly important to understand how they make decisions, especially in high-stakes applications.

According to a 2023 report by *MarketsandMarkets*, the global NLP market is projected to reach $49.4 billion by 2027, driven by increasing demand for AI-powered solutions across industries.

The ethical implications of SNLP are also receiving increasing attention. Concerns about bias in training data, the potential for misuse of NLP technologies, and the impact on employment are all being actively debated.

Conclusion

Statistical Natural Language Processing has fundamentally transformed the way machines interact with human language. From early n-gram models and HMMs, which displaced brittle rule-based systems, to the sophisticated deep learning models of today, SNLP continues to evolve, driving innovation across many industries.

As we generate more data and develop more powerful algorithms, the potential of SNLP to model language and support more capable machines continues to grow. Realizing that potential responsibly means the field must keep addressing ethical concerns and ensuring fairness and accountability in these powerful technologies.