Remove Negative Comments Regarding Background And Personal Profile

Online platforms are increasingly facing pressure to moderate user-generated content, not just for hate speech and misinformation, but also for negative comments pertaining to an individual's background and personal profile. This issue has sparked debates about freedom of speech versus the right to privacy and protection from online harassment.

At the core of the debate is the question of what constitutes acceptable online discourse, and how far platforms should be responsible for the emotional and psychological well-being of their users. There is no universally accepted definition of "negative" in this context, which complicates matters and leads to inconsistent application of content moderation policies.

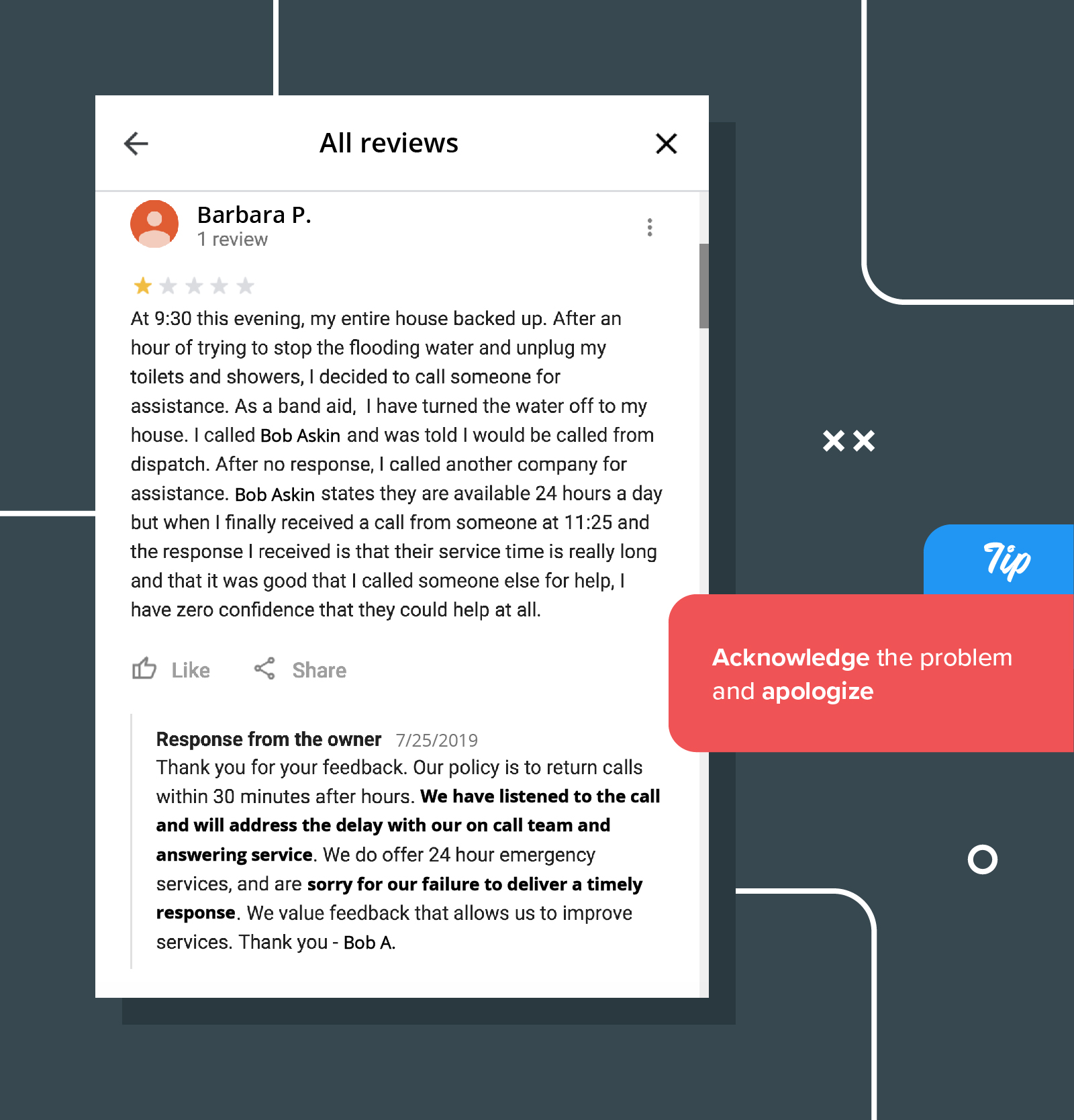

The Growing Demand for Content Removal

Calls to remove negative comments about people's backgrounds and personal profiles are growing louder, fueled by concerns about online bullying, doxxing, and the potential for real-world harm. These concerns are particularly acute for people from marginalized communities, who are often disproportionately targeted with discriminatory or abusive content.

Organizations like the Anti-Defamation League (ADL) and the Southern Poverty Law Center (SPLC) have long advocated for stricter content moderation policies to combat online hate and harassment. Their efforts have helped make platform providers and policymakers more aware of the potential consequences of unchecked online abuse.

Who is affected by these comments?

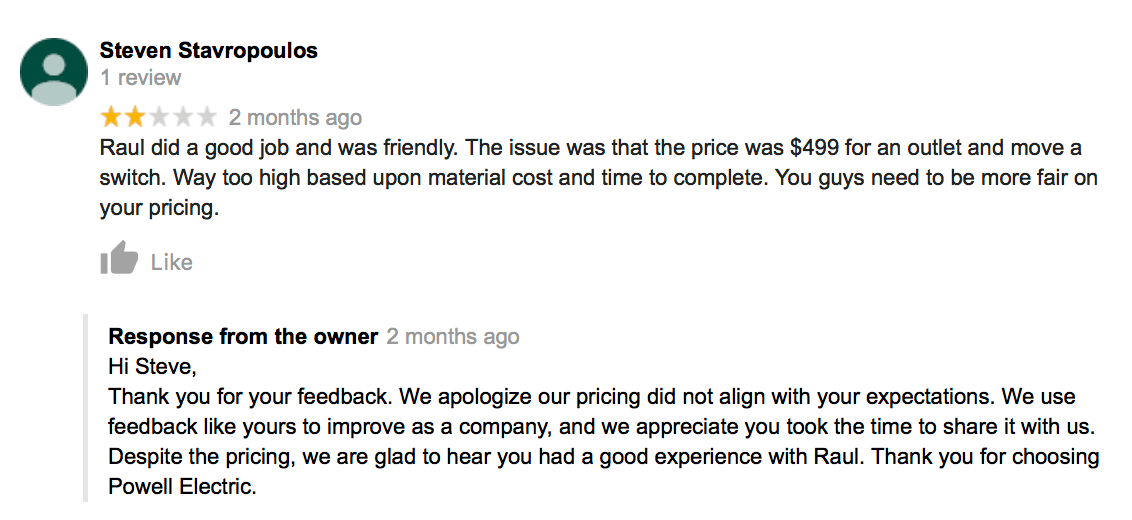

Negative comments about a person's background and personal profile affect a broad range of people. Public figures, including politicians and celebrities, frequently face intense scrutiny and criticism online. Private citizens, however, are vulnerable too.

Students, job seekers, and individuals simply expressing their opinions online can all become targets of negative commentary. The consequences can range from emotional distress and reputational damage to career setbacks and even physical threats.

What kind of content is being flagged?

The content being flagged typically includes comments that attack an individual's ethnicity, religion, sexual orientation, gender identity, disability, or other personal characteristics. It can also encompass the sharing of personal information without consent (doxxing), or the spreading of rumors and false accusations.

The line between legitimate criticism and harmful abuse can be blurry, making it difficult to establish clear guidelines for content removal. This ambiguity is further complicated by the diverse cultural and legal contexts in which online platforms operate.

Platform Responses and Challenges

Social media giants like Facebook, Twitter (now X), and YouTube have implemented various content moderation policies aimed at addressing online abuse. These policies often include provisions against hate speech, harassment, and the sharing of private information.

However, the enforcement of these policies is often inconsistent and faces significant challenges. The sheer volume of user-generated content makes it impossible for human moderators to review every post and comment.

Automated content moderation systems, while improving, are still prone to errors and struggle with nuanced language and cultural context. They also raise concerns about potential censorship and the suppression of legitimate expression.

"Our commitment is to provide a safe and respectful environment for all users. We are constantly working to improve our content moderation policies and enforcement mechanisms," said a spokesperson for Meta, the parent company of Facebook and Instagram, in a recent statement.

Legal and Ethical Considerations

The legal landscape surrounding online content moderation is complex and varies widely across jurisdictions. In the United States, Section 230 of the Communications Decency Act provides broad immunity to online platforms from liability for user-generated content.

This protection has been criticized by some who argue that it shields platforms from accountability for the spread of harmful content. Others defend Section 230, arguing that it is essential for protecting free speech and fostering innovation online.

Ethically, the debate centers on the balance between freedom of expression and the right to privacy and protection from harm. There is no easy solution, and finding a compromise that satisfies all stakeholders is a significant challenge.

The Future of Online Content Moderation

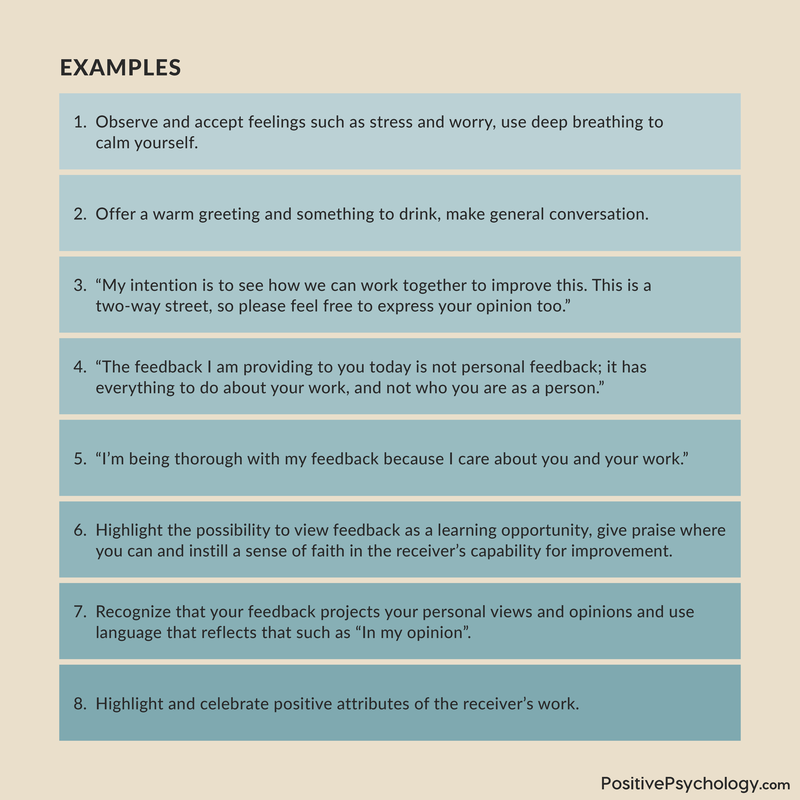

The ongoing debate about removing negative comments concerning a person's background and personal profile highlights the need for more robust and transparent content moderation policies. These include better training for human moderators, more sophisticated AI-powered tools, and closer collaboration between platforms, researchers, and policymakers.

It also requires a broader societal conversation about the responsibilities of individuals in the digital age. Education and awareness campaigns can play a crucial role in promoting responsible online behavior and encouraging users to report harmful content.

Ultimately, addressing the issue of online abuse requires a multifaceted approach that combines technological solutions, legal frameworks, and ethical considerations. The goal is to create a more inclusive and respectful online environment where individuals can express themselves freely without fear of harassment or discrimination.
